Installation

Pre-installation Steps

NetFlow and SNMP Analytics for Splunk App relies on *flow data processed by NetFlow Optimizer™ (NFO) and enables you to analyze it using Splunk® Enterprise or Splunk® Cloud.

To download a free trial of NetFlow Optimizer, visit https://www.netflowlogic.com/download/ and register to receive a free trial key. See NetFlow Optimizer Installation and NetFlow Optimizer Administration Guide, and follow the instructions for your platform.

NetFlow data is sent to Splunk from NFO in syslog or JSON formats.

Whether you use Splunk Enterprise or Splunk Cloud, configure your Splunk data inputs according to your accepted best practices.

You need to install both NetFlow and SNMP Analytics for Splunk App and Technology Add-On for NetFlow:

- NetFlow and SNMP Analytics for Splunk App (netflow) (https://splunkbase.splunk.com/app/489/)

- Technology Add-On for NetFlow (TA-netflow) (https://splunkbase.splunk.com/app/1838/)

NFO can send data to Splunk using one of the following options:

- Directly to a UDP input port of a Splunk Indexer (acceptable for a proof of concept, but not recommended in production because data can be lost)

- Via Splunk Forwarder

- Via rsyslog / syslog-ng and Splunk forwarders

- Via Splunk HEC

- Via Splunk Connect for Syslog

Installation of App and TA

Install the App on your Splunk Search Heads

NetFlow and SNMP Analytics for Splunk App (netflow) is available here: (https://splunkbase.splunk.com/app/489/).

Several dashboards of the App rely on the Force Directed App for Splunk for Topology View. To use Topology View, make sure it is installed in your Splunk environment: https://splunkbase.splunk.com/app/3767/

This App requires the Technology Add-On for NetFlow (TA-netflow).

Install Add-on on your Splunk Search Heads, Indexers, and Heavy Forwarders

Technology Add-On for NetFlow (TA-netflow) is available here: (https://splunkbase.splunk.com/app/1838/). This Add-on collects *flow data processed by NetFlow Optimizer™ (NFO) software by NetFlow Logic, providing Splunk CIM compliant field names, eventtypes and tags for *flow data. The Add-on can be used with the App or with Splunk Enterprise Security.

Installing into Splunk Enterprise

Technology Add-on for NetFlow Installation

This TA enables you to ingest flow data into either an events index or a metrics index.

Choose Events index if you need access to raw events. However, this option results in slower performance.

Choose Metrics index for better performance with large volumes of flow data. Note that you then need to use the mpreview or mstats command to search and view your data.

NFO output should be configured in JSON format for a Metrics index.

Events Index

By default, NetFlow Optimizer events are stored in the main index. If you want to use another index, for example flowintegrator, go to Settings->Indexes and, if the flowintegrator index is not present, click the New Index button, enter flowintegrator, and click Save.

Alternatively, if you have SSH access to your Splunk servers, please perform the following:

Create the $SPLUNK_ROOT/etc/apps/TA-netflow/local/indexes.conf file, and add the following lines to it:

[flowintegrator]

homePath = $SPLUNK_DB/flowintegrator/db

coldPath = $SPLUNK_DB/flowintegrator/colddb

thawedPath = $SPLUNK_DB/flowintegrator/thaweddb

Set sourcetype to flowintegrator in your Data inputs

Restart Splunk

Metrics Index

To ingest flow data into a metrics index, go to Settings->Indexes, click the New Index button, enter netflow_metrics, select Index Data Type Metrics, and click Save.

Alternatively, if you have SSH access to your Splunk servers, please perform the following:

Create the $SPLUNK_ROOT/etc/apps/TA-netflow/local/indexes.conf file, and add the following lines to it:

[netflow_metrics]

coldPath = $SPLUNK_DB/netflow_metrics/colddb

datatype = metric

enableDataIntegrityControl = 0

enableTsidxReduction = 0

homePath = $SPLUNK_DB/netflow_metrics/db

maxTotalDataSizeMB = 512000

thawedPath = $SPLUNK_DB/netflow_metrics/thaweddb

Set sourcetype to netflow_metrics in your Data inputs

Restart Splunk
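A misspelled stanza name or path in indexes.conf creates an index with a different name than the one the inputs and macros point to, so it can be worth checking the file before restarting Splunk. The following is a minimal Python sketch of such a check, not something shipped with the Add-on. The function name check_index_stanzas is hypothetical, and the only rule it applies is an assumption taken from the examples above: each stanza name should appear in its own homePath, coldPath, and thawedPath.

```python
import configparser

# Path settings that every index stanza in the examples above defines.
PATH_KEYS = ("homePath", "coldPath", "thawedPath")


def check_index_stanzas(conf_text):
    """Return a list of human-readable problems found in indexes.conf text."""
    parser = configparser.ConfigParser(interpolation=None)
    parser.optionxform = str  # keep Splunk's case-sensitive setting names
    parser.read_string(conf_text)

    problems = []
    for index_name in parser.sections():
        for key in PATH_KEYS:
            value = parser[index_name].get(key)
            if value is None:
                problems.append(f"[{index_name}] is missing {key}")
            elif f"/{index_name}/" not in value:
                problems.append(
                    f"[{index_name}] {key} does not reference the index name: {value}"
                )
    return problems


SAMPLE = """
[netflow_metrics]
homePath = $SPLUNK_DB/netflow_metrics/db
coldPath = $SPLUNK_DB/netflow_metrics/colddb
thawedPath = $SPLUNK_DB/netflow_metrics/thaweddb
datatype = metric
"""

# An empty list means no problems were found in the sample.
print(check_index_stanzas(SAMPLE))
```

To check a real file, read its contents and pass the text to the function. Python's configparser is stricter than Splunk's own parser (for example, it rejects duplicate settings), so treat a parsing error as a prompt to inspect the file rather than as proof that Splunk would reject it.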

This Add-on has the following settings in props.conf and transforms.conf. Edit these settings to match your requirements.

props.conf

[netflow_metrics]

INDEXED_EXTRACTIONS = json

LINE_BREAKER = ([\r\n]+)

NO_BINARY_CHECK = true

category = Log to Metrics

TRANSFORMS-extract_all_fields = extract_all_fields

METRIC-SCHEMA-TRANSFORMS = metric-schema:netflow_metrics

disabled = false

pulldown_type = true

KV_MODE = none

transforms.conf

The following stanza in transforms.conf indicates which of the numeric fields should be treated as dimensions in the metrics index:

[metric-schema:netflow_metrics]

METRIC-SCHEMA-MEASURES = _NUMS_EXCEPT_ nfc_id,src_port,src_asn,src_tos,src_vlan,dest_port,dest_asn,dest_tos,dest_vlan,protocol,input_snmp,output_snmp,ifIndex,ifName,ifType,ifDescr,row_num

You may see which fields are dimensions and which are metrics by running the following search:

| mcatalog values(_dims) values(metric_name) WHERE "index"="netflow_metrics"
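To make the effect of _NUMS_EXCEPT_ concrete, the following Python sketch approximates the split: numeric fields become measures unless they are named in the exception list, and everything else is kept as a dimension. This is an illustration only, not Splunk's implementation. The function name and the sample event, including the bytes_in and packets_in fields and all values, are invented for the example.

```python
# Fields listed after _NUMS_EXCEPT_ in the [metric-schema:netflow_metrics] stanza.
EXCLUDED = {
    "nfc_id", "src_port", "src_asn", "src_tos", "src_vlan",
    "dest_port", "dest_asn", "dest_tos", "dest_vlan", "protocol",
    "input_snmp", "output_snmp", "ifIndex", "ifName", "ifType",
    "ifDescr", "row_num",
}


def is_numeric(value):
    """True for int/float values; bool is excluded although it subclasses int."""
    return isinstance(value, (int, float)) and not isinstance(value, bool)


def split_measures_and_dimensions(event, excluded=EXCLUDED):
    """Approximate the measure/dimension split for one JSON event."""
    measures, dimensions = {}, {}
    for name, value in event.items():
        if is_numeric(value) and name not in excluded:
            measures[name] = value
        else:
            dimensions[name] = value
    return measures, dimensions


# Invented sample event, for illustration only.
event = {
    "nfc_id": 20062,
    "src_ip": "10.0.0.1",
    "src_port": 51234,
    "dest_port": 443,
    "protocol": 6,
    "bytes_in": 1500,
    "packets_in": 3,
}

measures, dimensions = split_measures_and_dimensions(event)
print("measures:", sorted(measures))
print("dimensions:", sorted(dimensions))
```

In this sketch src_port and protocol are numeric but stay dimensions because they are in the exception list, which is the reason identifiers such as ports and interface indexes are listed there: summing or averaging them would be meaningless.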

NetFlow Analytics for Splunk App Installation

Events Index

After installing NetFlow and SNMP Analytics for Splunk App, go to Settings->Advanced search->Search macros. Find the [netflow_index] macro, click on it, and change the definition to index=flowintegrator sourcetype=flowintegrator.

Alternatively, if you have SSH access to your Splunk servers, please perform the following:

Create the file if it does not already exist: $SPLUNK_ROOT/etc/apps/netflow/local/macros.conf, and add the following lines to it:

[netflow_index]

definition = index=flowintegrator sourcetype=flowintegrator

Restart Splunk for the changes to take effect.

Metrics Index

After installing NetFlow and SNMP Analytics for Splunk App, go to Settings->Advanced search->Search macros. Find the [netflow_metrics_index] macro, click on it, and change the definition to index=netflow_metrics sourcetype=netflow_metrics.

Alternatively, if you have SSH access to your Splunk servers, please perform the following:

Create the file if it does not already exist: $SPLUNK_ROOT/etc/apps/netflow/local/macros.conf, and add the following lines to it:

[netflow_metrics_index]

definition = index=netflow_metrics sourcetype=netflow_metrics

Restart Splunk for the changes to take effect.

Configure Universal Forwarder Input

Create or modify the %SPLUNK_HOME%/etc/system/local/inputs.conf file as follows. There are two options: listen directly for NetFlow events on a specific port, or monitor files created by syslog-ng or rsyslog.

Receiving Syslogs Directly from NFO (UDP port 10514)

Add the following lines to inputs.conf file and modify it for your netflow index, if necessary:

[udp://10514]

sourcetype = flowintegrator

index = flowintegrator
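Before pointing NFO at the forwarder, you may want to confirm that the [udp://10514] input above receives data at all. The following Python sketch sends one hand-built syslog line over UDP. It is a connectivity test only: the message body is invented and is not real NFO output, the function names are hypothetical, and the host in the usage comment is a placeholder.

```python
import socket
from datetime import datetime


def build_test_syslog(hostname="nfo-test", body="nfc_id=20062 test_message=hello"):
    """Build a BSD-syslog-style line with program name NFO.

    PRI 14 = facility user (1) * 8 + severity info (6).
    The body is an invented key=value payload, not real NFO output.
    """
    now = datetime.now()
    # BSD syslog pads the day of month with a space, not a zero.
    timestamp = f"{now:%b} {now.day:>2} {now:%H:%M:%S}"
    return f"<14>{timestamp} {hostname} NFO: {body}"


def send_udp(message, host, port=10514):
    """Send one datagram; UDP gives no delivery confirmation."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message.encode("utf-8"), (host, port))


# Usage (placeholder host name, replace with your forwarder):
# send_udp(build_test_syslog(), "forwarder.example.com")
```

Because UDP is fire-and-forget, success is only visible on the Splunk side, for example by searching index=flowintegrator sourcetype=flowintegrator for the test string shortly after sending.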

Configuring Universal Forwarder with syslog-ng or rsyslog

In this scenario syslog-ng or rsyslog is configured to listen for syslogs sent by NFO on UDP port 10514. Syslog-ng and rsyslog usually write the logs into configurable directories. In this example we assume that the logs are written to /var/log/netflow.

Add the following lines to inputs.conf file and modify it for your netflow index, if necessary:

[monitor:///var/log/netflow]

sourcetype = flowintegrator

index = flowintegrator

It is very important to set sourcetype=flowintegrator and to point it to the index where Netflow Analytics for Splunk App and Add-on are expecting it.

Configure Universal Forwarder Output (Target Indexers)

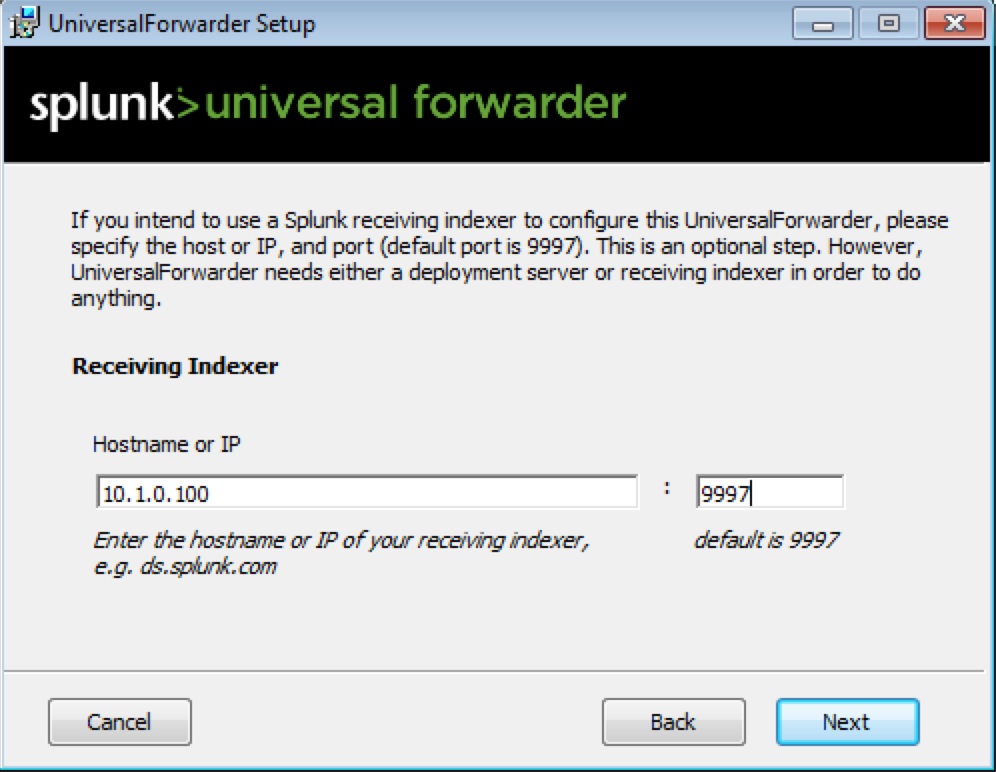

During the installation of the Universal Forwarders a Receiving Indexer can be configured, as it can be seen here:

It is an optional step during the installation. If it was not configured or if load balancing is required, additional Receiving Indexers can be added later by adding to the %SPLUNK_HOME%/etc/system/local/outputs.conf file:

[tcpout]

defaultGroup = default-autolb-group

[tcpout:default-autolb-group]

server = 10.1.0.100:9997,10.1.0.101:9997

More info about load balancing: http://docs.splunk.com/Documentation/Splunk/latest/Forwarding/Setuploadbalancingd#How_load_balancing_works
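When a forwarder does not deliver data, a first check is whether each receiving indexer in the server setting accepts TCP connections from the forwarder host. The following Python sketch parses a server value such as the one above and attempts a connection to each target. The function names are hypothetical, and the parser assumes plain host:port entries (bracketed IPv6 addresses are not handled).

```python
import socket


def parse_servers(server_value):
    """Split an outputs.conf 'server' value into (host, port) tuples."""
    targets = []
    for item in server_value.split(","):
        host, _, port = item.strip().rpartition(":")
        targets.append((host, int(port)))
    return targets


def is_reachable(host, port, timeout=3.0):
    """Return True if a TCP connection can be opened within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def report(server_value):
    """Print one line per receiving indexer."""
    for host, port in parse_servers(server_value):
        state = "reachable" if is_reachable(host, port) else "NOT reachable"
        print(f"{host}:{port} is {state}")


# Usage, run on the forwarder host:
# report("10.1.0.100:9997,10.1.0.101:9997")
```

A successful connection only shows that the port is open. It does not prove that the indexer is configured to receive forwarded data on that port.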

Installing into a Splunk Cloud Deployment

You must be a Splunk Cloud administrator to install and manage apps in your Splunk Cloud deployment. The procedure for installing apps and add-ons for use with your Splunk Cloud instance depends on the type of your Splunk Cloud deployment and the version of Splunk Cloud that you are running. Please visit Splunk Cloud Platform Admin Manual for details: https://docs.splunk.com/Documentation/SplunkCloud/latest/Admin/SelfServiceAppInstall.

Installing with Splunk Connect for Syslog (SC4S)

You can use Splunk Connect for Syslog (SC4S) as a forwarder between NFO and Splunk Enterprise or Splunk Cloud. This section describes how to install and configure SC4S and configure HTTP Event Collector (HEC).

Splunk HEC configuration

- On Splunk, add an HEC data input: https://splunk.github.io/splunk-connect-for-syslog/main/gettingstarted/#configure-the-splunk-http-event-collector

a. Navigate to Settings > Data inputs > HTTP Event Collector > + Add new

b. Enter the HEC name

c. On the Input settings page, don't select allowed indexes

d. Save the HEC input

- If the HEC data input is disabled, enable it or enable all tokens in the Global Settings
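Once the token exists, it can be verified independently of SC4S by posting a single test event to the collector. The following Python sketch builds such a request with the standard library. It assumes the standard HEC event endpoint /services/collector/event and the "Splunk <token>" authorization header. The function name, host name, and token in the example are placeholders.

```python
import json
import urllib.request


def build_hec_request(base_url, token, event, index=None, sourcetype=None):
    """Build (but do not send) a POST request for the HEC event endpoint."""
    payload = {"event": event}
    if index:
        payload["index"] = index
    if sourcetype:
        payload["sourcetype"] = sourcetype
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/services/collector/event",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Usage (placeholder host and token):
# request = build_hec_request(
#     "https://splunk.example.com:8088", "<hec-token-value>",
#     "HEC connectivity test", index="flowintegrator",
# )
# with urllib.request.urlopen(request, timeout=10) as response:
#     print(response.status, response.read().decode("utf-8"))
```

If the Splunk server uses a certificate that the client does not trust, urlopen raises an SSL error. In that case pass an appropriate ssl.SSLContext through the context argument of urlopen rather than disabling verification globally.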

SC4S Installation and Configuration

- Install Docker Engine. For example, instructions for CentOS: https://docs.docker.com/engine/install/centos/

- Configure the UDP receive buffer size and enable packet forwarding for IPv4. Edit /etc/sysctl.conf:

net.core.rmem_default = 17039360

net.core.rmem_max = 17039360

net.ipv4.ip_forward=1

- Save and apply to the kernel:

sysctl -p

- Follow these steps for container and systemd configuration: https://splunk.github.io/splunk-connect-for-syslog/main/gettingstarted/docker-systemd-general/

- Configure the SC4S environment in /opt/sc4s/env_file:

SC4S_DEST_SPLUNK_HEC_DEFAULT_URL=https://<splunk_host>:8088

SC4S_DEST_SPLUNK_HEC_DEFAULT_TOKEN=<hec-token-value>

#Uncomment the following line if using untrusted SSL certificates

#SC4S_DEST_SPLUNK_HEC_DEFAULT_TLS_VERIFY=no

SC4S_LISTEN_NETFLOWLOGIC_NFO_UDP_PORT=10514

- Configure a filter to distinguish NFO syslogs. Put the following content into the /opt/sc4s/local/config/app_parsers/app-nfo.conf file:

#

# Copyright (C) 2021 NetFlow Logic

# All rights reserved.

#

block parser nfo-parser() {

channel {

rewrite {

# set defaults; these values can be overridden at run time by splunk_metadata.csv

r_set_splunk_dest_default(

index("flowintegrator")

source("sc4s:nfo")

sourcetype("flowintegrator")

vendor_product("netflowlogic_nfo")

template("t_msg_only")

);

# add nfo_hostname fields

set("${HOST}", value("fields.nfo_hostname"));

# remove nfc_id from the message

subst('nfc_id=(\d+) ', '', value("MESSAGE") flags(store-matches));

# add nfc_id field

set("$1", value("fields.nfc_id") condition("$1" ne ""));

};

};

};

application netflowlogic_nfo[sc4s-syslog] {

filter {

"${PROGRAM}" eq "NFO";

};

parser { nfo-parser(); };

};

- Start SC4S:

systemctl start sc4s

- Configure NFO output:

<sc4s_host>:10514
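The rewrite rules in the nfo-parser block above can be easier to follow with a worked example. The following Python sketch mirrors the two steps on a sample message: the first nfc_id=<digits> token is removed from the message text, and its value is stored as a separate field together with the sending host name. This is an illustration of the intent of the syslog-ng configuration, not code used by SC4S. The function name and the sample message are invented.

```python
import re

# Same pattern as in the subst() rule: the nfc_id token plus one trailing space.
NFC_ID_PATTERN = re.compile(r"nfc_id=(\d+) ")


def apply_nfo_rewrite(message, host):
    """Return (rewritten_message, indexed_fields) for one syslog message."""
    fields = {"nfo_hostname": host}
    match = NFC_ID_PATTERN.search(message)
    if match:
        # Only set nfc_id when the pattern matched, like the condition() clause.
        fields["nfc_id"] = match.group(1)
        # Without a global flag, only the first occurrence is removed.
        message = NFC_ID_PATTERN.sub("", message, count=1)
    return message, fields


# Invented sample message, for illustration only.
text, fields = apply_nfo_rewrite("nfc_id=20062 src_ip=10.0.0.1 bytes_in=1500", "nfo01")
print(text)
print(fields)
```

For the sample above, the rewritten message no longer contains nfc_id, and the value 20062 travels as a field instead. A message without an nfc_id token passes through unchanged and only gets the nfo_hostname field.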

Useful Commands

- To start, stop, or restart SC4S:

systemctl start sc4s

systemctl stop sc4s

systemctl restart sc4s

- To check Docker logs:

docker logs SC4S

- To validate SC4S status on the Splunk side, run either of the following searches:

index=* sourcetype=sc4s:events

index=* sourcetype=sc4s:events "starting up"

- To find NFO syslogs:

index="flowintegrator" source="sc4s:nfo"