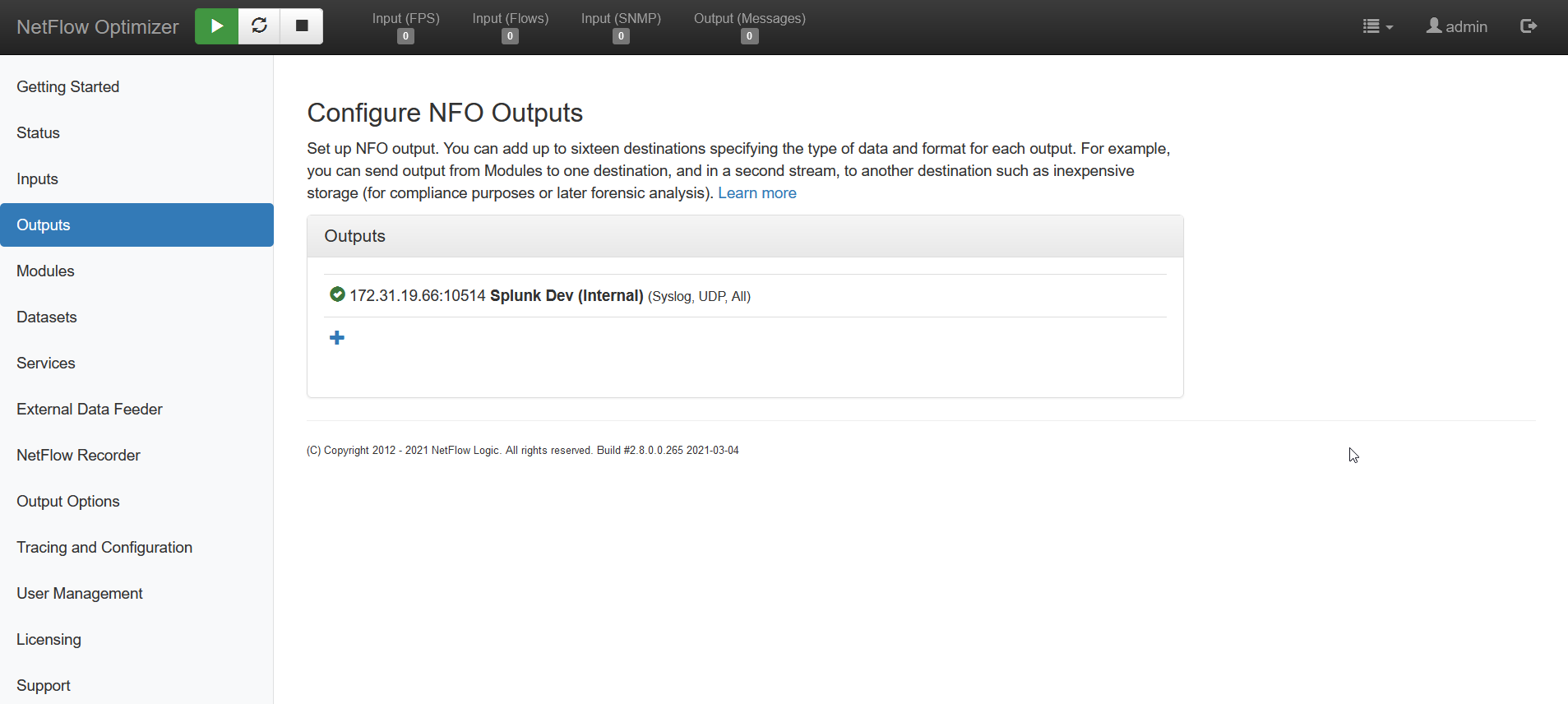

Configure Outputs

You may add up to sixteen output destinations, specifying the format and the kind of data to be sent to each destination. Click on the ‘plus’ symbol to add data outputs.

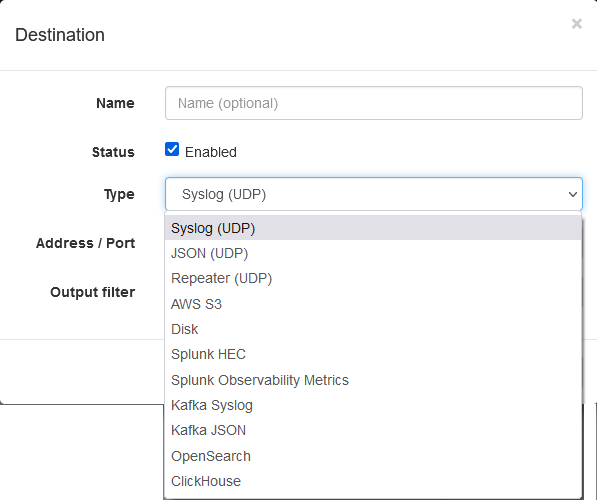

Output Types

NFO supports the following types of outputs:

| Type | Description |
|---|---|
| Syslog (UDP) | Sends data to the destination in syslog format |
| Syslog (JSON) | Sends data to the destination in JSON format |
| Repeater (UDP) | Retransmits flow data received by NFO to the destination, e.g. your legacy NetFlow collector or another NFO instance |
| AWS S3 | Sends data to AWS S3 buckets |
| Disk | Writes data to a disk |
| Splunk HEC | Sends data to Splunk HEC in key=value format |
| Splunk Observability Metrics | Sends data to Splunk Observability Metrics (SignalFx) |
| Kafka Syslog | Sends data to Kafka in syslog format |
| Kafka JSON | Sends data to Kafka in JSON format |
| OpenSearch | Sends data to OpenSearch (e.g. Amazon OpenSearch Service) |
| ClickHouse | Sends data to your ClickHouse database |

Syslog (UDP) and JSON (UDP)

Use these output types to send data in syslog or JSON format over UDP.

| Parameter | Description |
|---|---|
| Address | Destination IP address or host name where NFO sends syslog or JSON messages |
| Port | UDP port number |
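To check that a syslog or JSON output over UDP actually reaches its destination, a small listener on the destination host can help. The following is a minimal Python sketch and is not part of NFO. The port 5514 and the function names `classify` and `listen` are assumptions chosen for illustration; use the port configured in the output.

```python
import json
import socket


def classify(datagram):
    """Label a datagram as 'json' or 'syslog' (a rough check, for illustration)."""
    text = datagram.decode("utf-8", errors="replace").strip()
    try:
        is_json = isinstance(json.loads(text), dict)
    except ValueError:
        is_json = False
    return ("json" if is_json else "syslog"), text


def listen(port=5514, host="0.0.0.0", limit=10):
    """Print up to `limit` datagrams received on the given UDP port."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(limit):
            data, sender = sock.recvfrom(65535)
            kind, text = classify(data)
            print(f"{sender[0]} [{kind}] {text}")
```

Calling `listen(5514)` on the destination host prints the first ten messages it receives. Binding to a port below 1024 usually requires elevated privileges.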

Repeater (UDP)

Note that the Repeater option is available only on Linux platforms.

This output type should be used if you want to retransmit binary flow records (NetFlow, sFlow, IPFIX, etc.) to another destination, such as your legacy NetFlow Collector or another NFO instance.

| Parameter | Description |
|---|---|
| Address | Destination IP address or host name to which NFO retransmits binary flow records |

Note that the Repeater option lets you specify the destination IP address, but not the destination port. This feature was designed so that NFO can be "inserted" between NetFlow exporters and legacy NetFlow collectors. When forwarding the original message received from an exporter, NFO uses the input port number and the exporter IP address. If you use this output type, NFO should be installed under the root user.

AWS S3

Use this output type to send NFO data to Amazon S3 buckets.

When NFO writes output to AWS S3, the file is closed when one of the following occurs:

- The number of bytes in the file reaches the Output Buffer Size
- The number of records in the file reaches the Output Chunk Size
- The Output rotation interval elapses

| Parameter | Description |
|---|---|
| Credentials File | Absolute path to the AWS credentials file, e.g. /root/.aws/credentials. See below |
| Bucket Name | Name of the S3 bucket to send data to. You need to create this bucket in your AWS environment |
| NFO Folder | Name of the AWS S3 folder (directory). This folder will be created by NFO |
| NFO Filename | Filename pattern used when S3 files are created, e.g. nfo.log.gz creates S3 files named timestamp-nfo.log.gz |
| Output Buffer Size | Output buffer size, bytes. Min: 32768, max: 16777216, default: 4194304 |
| Output Chunk Size | S3 output file chunk size, records. Min: 1, max: 1000000, default: 100000 |
| Output rotation interval | S3 output file rotation interval, msec. Min: 1000, max: 3600000, default: 30000 |
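The three file-close conditions can be summarized in a few lines of Python. This is an illustrative sketch only, not NFO's implementation: the names `RotationLimits` and `should_close` are hypothetical, and the order in which the conditions are checked is an assumption. The default values are the ones documented for this output type.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RotationLimits:
    # Documented defaults for the buffer size, chunk size and rotation interval.
    buffer_size_bytes: int = 4_194_304
    chunk_size_records: int = 100_000
    rotation_interval_ms: int = 30_000


def should_close(bytes_written, records_written, file_age_ms, limits=RotationLimits()):
    """Return the reason the current file would be closed, or None to keep writing."""
    if bytes_written >= limits.buffer_size_bytes:
        return "buffer size reached"
    if records_written >= limits.chunk_size_records:
        return "chunk size reached"
    if file_age_ms >= limits.rotation_interval_ms:
        return "rotation interval elapsed"
    return None
```

With the defaults, a low-volume deployment closes a file every 30 seconds, while a busy one is more likely to hit the record or byte limit first. Raising the rotation interval produces fewer, larger S3 objects.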

Create an AWS credentials file, e.g. credentials. It should be placed on the machine where EDFN is installed. Use the IAM user's access key ID and secret access key to create the file as follows:

    [account_1]
    aws_access_key_id = your_access_key_id
    aws_secret_access_key = your_secret_access_key
    ..........
    [account_N]
    aws_access_key_id = your_access_key_id
    aws_secret_access_key = your_secret_access_key

Change the file permissions to read-only for the root user (if EDFN is running as root): chmod 400 credentials. The Agent reads the file and loads all profiles from it. The Agent expects each account to have only one profile.

Set the Credentials File parameter to the path of this file, for example: /root/.aws/credentials
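Before pointing the output at the credentials file, it can be useful to confirm that the file parses and has the expected permissions. The sketch below uses Python's standard `configparser` module, which reads the same section and key layout. The function names are hypothetical, and this is not how the Agent itself reads the file. Note that the row of dots in the example above stands for omitted profiles and must not appear in a real file.

```python
import configparser
import os
import stat


def load_profiles(path):
    """Return {profile_name: access_key_id} for every profile in the file."""
    parser = configparser.ConfigParser()
    with open(path, encoding="utf-8") as handle:
        parser.read_file(handle)
    profiles = {}
    for name in parser.sections():
        section = parser[name]
        # Both keys must be present for a profile to be usable.
        if "aws_access_key_id" not in section or "aws_secret_access_key" not in section:
            raise ValueError(f"profile [{name}] is missing a key")
        profiles[name] = section["aws_access_key_id"]
    return profiles


def is_owner_read_only(path):
    """True when the mode is exactly 0400, the result of `chmod 400`."""
    return stat.S_IMODE(os.stat(path).st_mode) == 0o400
```

For example, `load_profiles("/root/.aws/credentials")` lists the profile names found, and `is_owner_read_only` on the same path confirms that the `chmod 400` step was applied.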

Disk

Use this output type to write NFO data to files on a disk.

| Parameter | Description |
|---|---|
| Output File Folder | Path to the folder where output files are created |
| Output File Name | Pattern used in the file name. Default is nfoflow, which gives file names of the form yyyy-mm-dd_nfc_id_hash_hh-mm-ss-nfoflow.log |
| Output File Buffer Size, bytes | Disk output buffer size. Min: 32768, max: 16777216, default: 4194304 |
| Output File Chunk Size, flow records | Disk output file chunk size. Min: 1, max: 1000000, default: 100000 |
| Output File Rotation Interval, msec | Disk output file rotation interval. Min: 1000, max: 3600000, default: 30000 |
| Output File Flush Interval, msec | Disk output write interval. Default: 1000 |

Splunk HEC

Use this output type to send NFO data to a Splunk HTTP Event Collector (HEC) input.

| Parameter | Description |
|---|---|
| Protocol | HTTP or HTTPS |
| Address | Destination IP address where NFO sends events for Splunk HEC |
| Port | Destination port number |
| Access token | Splunk HEC access token |
| Max batch size | Buffer size in bytes. When the number of bytes in the buffer reaches this size, NFO data is pushed out |
| Flush timeout | Time in msec after which NFO data is sent out, even if the batch has not reached its maximum size |
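To verify the Protocol, Address, Port and Access token values before entering them in NFO, you can build a test request against Splunk's documented HEC event endpoint (`/services/collector/event`, with an `Authorization: Splunk <token>` header). The Python sketch below only constructs the request. The function name and the sample event are hypothetical, and which HEC endpoint NFO itself uses is not stated here.

```python
import json
import urllib.request


def build_hec_request(protocol, address, port, token, event_text):
    """Build (but do not send) a POST to the Splunk HEC event endpoint."""
    url = f"{protocol}://{address}:{port}/services/collector/event"
    # HEC accepts a JSON envelope; the key=value text goes in the "event" field.
    body = json.dumps({"event": event_text}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Splunk {token}",
            "Content-Type": "application/json",
        },
    )
```

Passing the result to `urllib.request.urlopen(request, timeout=5)` sends the test event. Splunk's default HEC port is 8088. With HTTPS and a self-signed Splunk certificate, the call fails certificate verification unless you supply a suitable SSL context.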

Splunk Observability Metrics

Use this output type to send NFO data to Splunk Observability Cloud.

| Parameter | Description |
|---|---|
| Access token | Splunk Observability Cloud access token |
| Realm | Splunk Observability Cloud realm |
| Source Name | NFO data source name, e.g. nfo |
| Report threads | Splunk Observability Cloud report threads |
| Report interval | Splunk Observability Cloud report interval, sec |
| Max body size | Buffer size in bytes. When the number of bytes in the buffer reaches this size, NFO data is pushed out |
| Socket timeout | Time in msec after which NFO data is sent out, even if the max body size has not been reached |
| nfc_id filter | List of nfc_ids of NFO Logic Module outputs, e.g. 20103 for NFO SNMP Polling Module - SNMP Custom OID Sets Monitor |

Kafka Syslog or Kafka JSON

Use these output types to send NFO data to Kafka in syslog or JSON format.

| Parameter | Description |
|---|---|
| Topic ID | Kafka topic name. This is a required field. The topic can be a constant string or a pattern like nfo-topic-${nfc_id}, where ${nfc_id} is substituted from the syslog or JSON message |
| Record key | Optional. If specified, it is a pattern string with variables like ${nfc_id} or ${exp_ip}, or any other variable from the syslog/JSON message |
| Properties file (*) | Absolute path to the Kafka producer properties file, for example /opt/flowintegrator/etc/kafka.properties |
| Report threads | Output thread count (default is 2). This is the number of threads allocated to receive messages produced by NFO and send them to Kafka |
| Report interval | Time interval in seconds between report thread executions (default is 10) |
| nfc_id filter | Comma-separated list of NFO Modules’ nfc_ids to be sent to a Kafka broker. Optional; if not set, all messages are sent |

(*) NFO reads the Kafka properties file only when NFO Server starts or when Kafka output configuration properties are changed. To apply changes made to this file, either restart NFO Server, or rename the file and update the output configuration.

Kafka properties file example:

    client.id=nfo
    bootstrap.servers=host1:port1,host2:port2,…
    # SASL and SSL configuration
    sasl.mechanism=PLAIN
    security.protocol=SASL_SSL
    sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required \
    username="nfo" \
    password="*****";
    ssl.truststore.type=JKS
    ssl.truststore.location=/opt/flowintegrator/etc/kafka_truststore.jks
    ssl.truststore.password=*****

See https://kafka.apache.org/documentation/#producerconfigs for all available properties.
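The Topic ID and Record key patterns, and the nfc_id filter, can be tried out offline before they are entered in the configuration. The Python sketch below mimics the `${variable}` substitution with the standard `string.Template` class, which uses the same placeholder syntax. The function names and the sample message are hypothetical. How NFO treats a variable that is missing from the message is not described here; this sketch raises `KeyError` in that case.

```python
from string import Template


def expand(pattern, message):
    """Substitute ${name} placeholders with values taken from a message."""
    return Template(pattern).substitute({k: str(v) for k, v in message.items()})


def passes_filter(message, nfc_id_filter):
    """An empty filter passes everything; otherwise nfc_id must be listed."""
    allowed = {item.strip() for item in nfc_id_filter.split(",") if item.strip()}
    return not allowed or str(message.get("nfc_id")) in allowed
```

For a message with `nfc_id` 20103, `expand("nfo-topic-${nfc_id}", message)` yields `nfo-topic-20103`. A pattern of this kind gives each Module its own topic, so the topics must exist or the broker must allow automatic topic creation.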

OpenSearch

Use this output type to send NFO data to OpenSearch (e.g. Amazon OpenSearch Service).

| Parameter | Description |
|---|---|
| URLs | Comma-separated list of HTTPS endpoints |
| Index | OpenSearch index name. This is a required field. The index can be a constant string or a pattern like nfo-${nfc_id}-${time:yyyy.MM.dd}, where ${nfc_id} and ${time} are substituted from the JSON message. A time format is required and is separated from the time field name by a colon. Formatting patterns are described here: https://docs.oracle.com/en/java/javase/11/docs/api/java.base/java/time/format/DateTimeFormatter.html |
| Username | OpenSearch authentication username. May be empty if client certificate authentication is used |
| Password | OpenSearch authentication password. May be empty if client certificate authentication is used |
| TLS client cert PEM file | Absolute path to the client certificate PEM file for authentication. May be empty if username/password authentication is used |
| TLS client key PEM file | Absolute path to the client key PEM file. The key must be password-protected. May be empty if username/password authentication is used |
| TLS client key password | Client key password. Required when a key file is provided |
| TLS trust certs PEM file | (optional) Absolute path to the certificates of the OpenSearch HTTP endpoints. May be empty if the certificates are signed by a global CA |
| Index template name | Template name inside OpenSearch. For more information, visit https://opensearch.org/docs/latest/opensearch/index-templates/ |
| Index template file | Absolute path to the JSON index template file. NFO is installed with the template file ${nfo_home}/etc/opensearch-index-template.json |
| Report threads | Output thread count (default is 2). This is the number of threads allocated to receive NetFlow data messages produced by NFO and send them to OpenSearch |
| Report interval | Time interval in seconds between report thread executions (default is 10) |
| Max body size | Maximum message size in bytes. NFO combines several messages into one bulk request. Default is 4,000,000 |
| nfc_id filter | Comma-separated list of NFO Modules’ nfc_ids to be sent to OpenSearch. Optional; if not set, all messages are sent |
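To see what index names a pattern such as nfo-${nfc_id}-${time:yyyy.MM.dd} produces, the expansion can be approximated in Python. This is a rough sketch under stated assumptions, not NFO's implementation: it supports only the yyyy, MM, dd and HH letters of the Java `DateTimeFormatter` syntax, it assumes the time field holds Unix epoch seconds, and it formats in UTC. The function name `expand_index` is hypothetical.

```python
import re
from datetime import datetime, timezone

# Only a small subset of Java DateTimeFormatter letters is handled here.
_JAVA_TO_STRFTIME = (("yyyy", "%Y"), ("MM", "%m"), ("dd", "%d"), ("HH", "%H"))
# Matches ${name} and ${name:format}.
_PLACEHOLDER = re.compile(r"\$\{(\w+)(?::([^}]+))?\}")


def expand_index(pattern, message):
    """Expand ${name} and ${name:format} placeholders from a message dict."""
    def replace(match):
        name, java_format = match.group(1), match.group(2)
        value = message[name]
        if java_format is None:
            return str(value)
        fmt = java_format
        for java, py in _JAVA_TO_STRFTIME:
            fmt = fmt.replace(java, py)
        # ASSUMPTION: the time field is Unix epoch seconds, formatted in UTC.
        return datetime.fromtimestamp(value, tz=timezone.utc).strftime(fmt)

    return _PLACEHOLDER.sub(replace, pattern)
```

A daily pattern like the one above creates one index per Module per day, which makes it straightforward to expire old data by deleting whole indices.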

ClickHouse

Use this output type to send NFO data to ClickHouse, an open-source column-oriented database. For more information, visit https://clickhouse.com/

| Parameter | Description |
|---|---|
| Protocol | HTTP or HTTPS |
| Address | Destination IP address where NFO sends events to ClickHouse |
| Port | Destination port number |

Output Filters

You can set filters for each output:

| Output Filter | Description |
|---|---|
| All | The destination receives all data generated by NFO, both by Modules and by the one-to-one conversion of original NetFlow/IPFIX/sFlow |
| Modules Output Only | The destination receives only data generated by enabled NFO Modules |
| Original NetFlow/IPFIX only | The destination receives all flow data, translated one-to-one into syslog or JSON. NetFlow/IPFIX Options from the original flow data, translated one-to-one into syslog or JSON, are also sent to this output. Use this option to archive all underlying flow records that NFO processes, for forensics. This destination is typically Hadoop or another inexpensive storage, as the volume for this destination can be quite high |
| Original sFlow only | The destination receives sFlow data, translated one-to-one into syslog or JSON. Use this option to archive all underlying sFlow records that NFO processes, for forensics. This destination is typically inexpensive syslog storage, as the volume for this destination can be quite high |