Deployment Architecture Considerations

Standalone vs. Distributed Deployment

- Standalone: All NFO components (Server, Controller, and EDFN) run on a single machine. This model suits small to medium-sized environments, evaluations, and centralized networks. Installation details are covered in the NFO Installation Guide.

- Distributed (Conceptual Overview): For larger or geographically dispersed networks, consider a distributed architecture in which the NFO Server(s) responsible for data collection and processing are separate from the NFO Controller (GUI and management). The Administration Guide provides details on configuring and managing distributed deployments; this guide focuses on the initial deployment of the core components.

Organizations with multiple data centers, hybrid network environments, or those requiring horizontal scalability to process high volumes of NetFlow data (typically exceeding 250,000 flows per second) should consider a distributed architecture. This model involves deploying multiple NFO nodes and additional instances of EDFN to enhance processing capacity and data distribution.
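As a rough sizing aid, the following Python sketch estimates how many processing nodes a distributed deployment needs for a given peak flow rate. The per-node capacity and the headroom fraction are hypothetical parameters, not published NFO figures; substitute values from the capacity planning guidance in the Administration Guide or from your own load tests.

```python
import math


def processing_nodes_needed(peak_flows_per_sec: float,
                            per_node_capacity: float,
                            headroom: float = 0.25) -> int:
    """Estimate the number of processing nodes for a peak flow rate.

    per_node_capacity and headroom are assumptions supplied by the caller;
    headroom is the fraction of each node's capacity kept in reserve.
    """
    if per_node_capacity <= 0:
        raise ValueError("per_node_capacity must be positive")
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in the range [0, 1)")
    usable_per_node = per_node_capacity * (1 - headroom)
    # Round up: a fractional node still means one more machine.
    return max(1, math.ceil(peak_flows_per_sec / usable_per_node))
```

For example, assuming a per-node capacity of 250,000 flows per second and 25% headroom, `processing_nodes_needed(600_000, 250_000)` returns 4, because each node is planned at 187,500 flows per second.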

Cloud vs. On-Premises Deployment

- On-Premises: Deploying NFO on your organization's physical or virtual infrastructure. Follow the Installation Guide for the chosen operating system.

- Cloud (Strategic Considerations): If deploying in the cloud (AWS, Azure, GCP), consider:

  - Instance Selection: Choose appropriate virtual machine sizes based on the capacity planning outlined in the Administration Guide.

  - Network Configuration: Configure cloud-based firewalls (Security Groups, Network Security Groups) to allow necessary inbound (flow data, GUI access) and outbound (to destination systems) traffic.

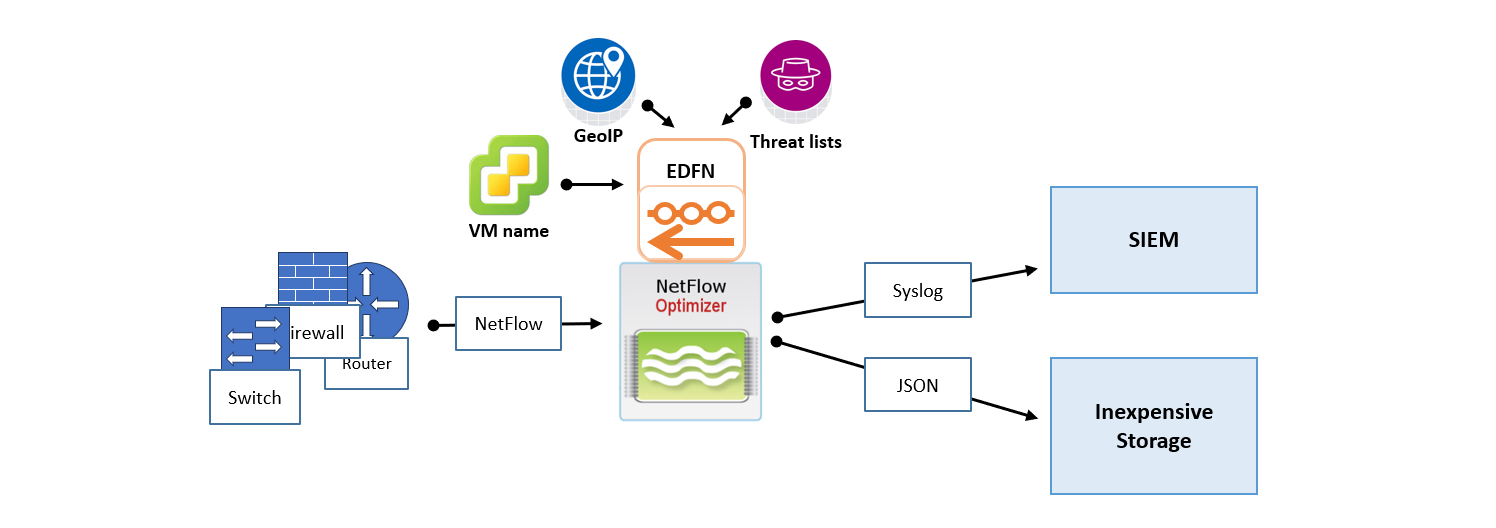

Single Instance Deployment

In this scenario, one instance of NetFlow Optimizer handles all flow data processing, enrichment, and SNMP polling. A single-instance deployment can be useful for evaluation purposes and might be sufficient to serve the needs of small to medium-sized organizations.

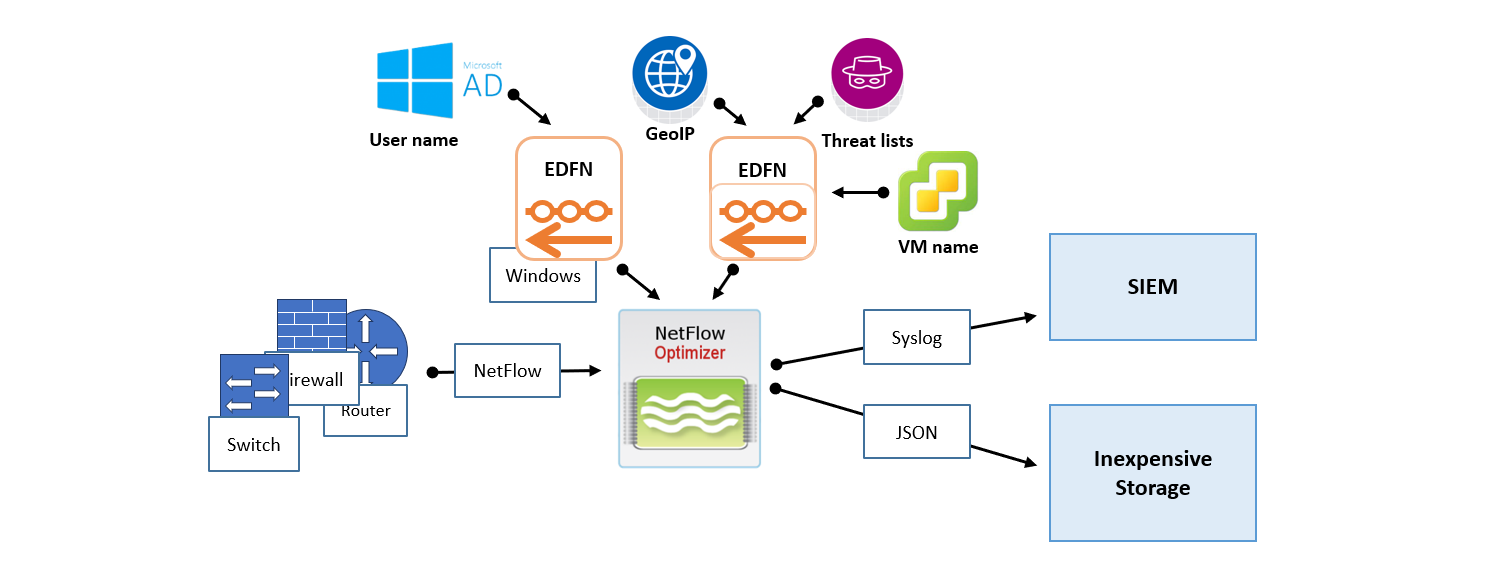

For NetFlow Optimizer (NFO) to integrate with Microsoft Active Directory, you will need to install the Windows version of the External Data Feeder for NFO (EDFN) on a Windows-based machine that is part of your Active Directory domain. Once installed, this EDFN instance should be configured to connect to your NFO system, enabling the necessary directory service integration.

Distributed Deployment on Premises

Consider a distributed deployment on your premises if you have multiple data centers or remote offices, require horizontal scalability for high-volume flow processing, or need to apply different processing rules or send data to various outputs based on device types or analytical needs. The following scenarios illustrate common distributed deployment models.

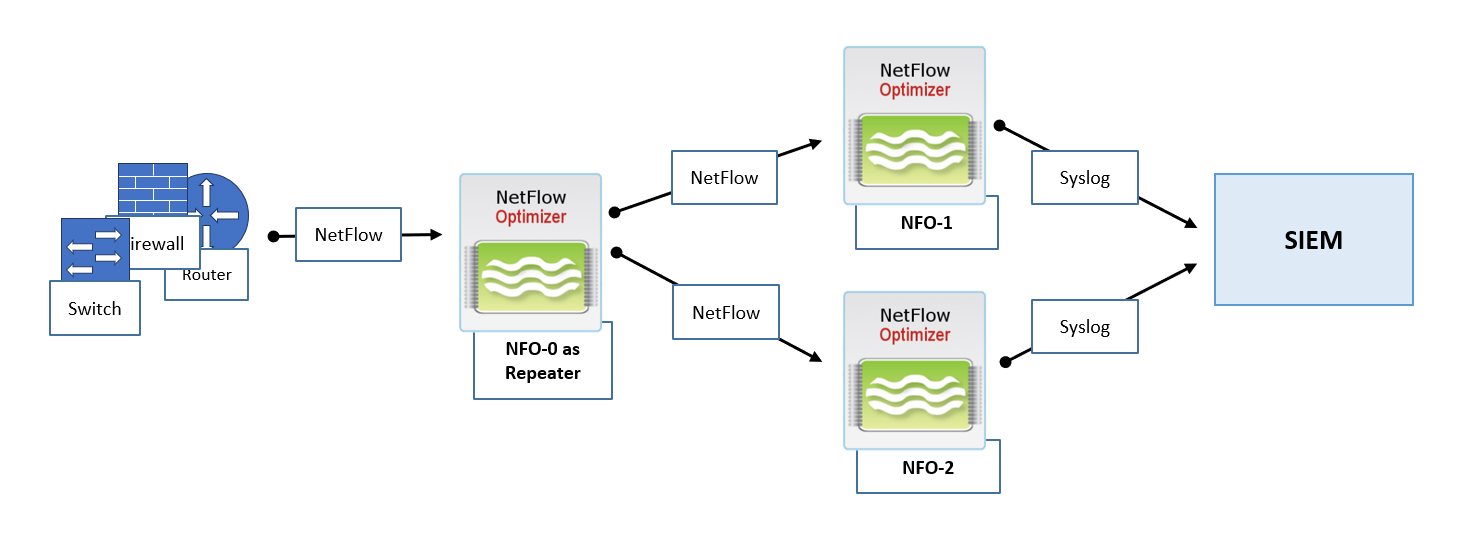

Distributed Deployment on Premises – Horizontal Scalability

This scenario addresses the need for increased processing capacity by distributing the workload across multiple NFO instances.

- Flow Ingestion: A central NFO instance (referred to here as NFO-0) is configured to receive all NetFlow data from your network devices.

- Flow Replication and Distribution: NFO-0 utilizes its Repeater functionality to create copies of the original flow packets. It is configured with filtering rules to distribute this replicated data to two or more downstream NFO instances, such as NFO-1 and NFO-2. For example, NFO-0 can be set up to forward NetFlow from approximately half of your network devices to NFO-1 and the other half to NFO-2.

- Independent Processing: NFO-1 and NFO-2 independently process the NetFlow data they receive from their respective sets of devices, applying the necessary NFO Logic Modules and configurations.

- Centralized Output: Both NFO-1 and NFO-2 are configured to send their processed output data to the same central SIEM or analytics platform.
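The device split that NFO-0 performs is defined through Repeater filtering rules in NFO itself; the Python sketch below is only a hypothetical model for deciding which exporters go to which downstream instance, for example when preparing those filter lists. It assigns whole exporters (devices), not individual flows, so that every packet from a device, including the NetFlow v9 or IPFIX templates needed to decode its data records, reaches the same downstream instance. The instance names are placeholders.

```python
import hashlib
import ipaddress

DOWNSTREAM = ["NFO-1", "NFO-2"]  # hypothetical downstream instance names


def assign_exporter(exporter_ip: str, targets: list[str]) -> str:
    """Map an exporter (the device sending flows) to one downstream target.

    Hashing the exporter address, rather than individual flows, keeps all
    traffic from one device on the same downstream instance.
    """
    if not targets:
        raise ValueError("at least one target is required")
    # Parse and pack the address so equivalent spellings hash identically.
    packed = ipaddress.ip_address(exporter_ip).packed
    digest = hashlib.sha256(packed).digest()
    index = int.from_bytes(digest[:8], "big") % len(targets)
    return targets[index]
```

Because the hash is computed over the exporter address, the assignment is stable across runs and roughly balanced across targets. Adding a target reshuffles many assignments, so regenerate filter lists deliberately when scaling out.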

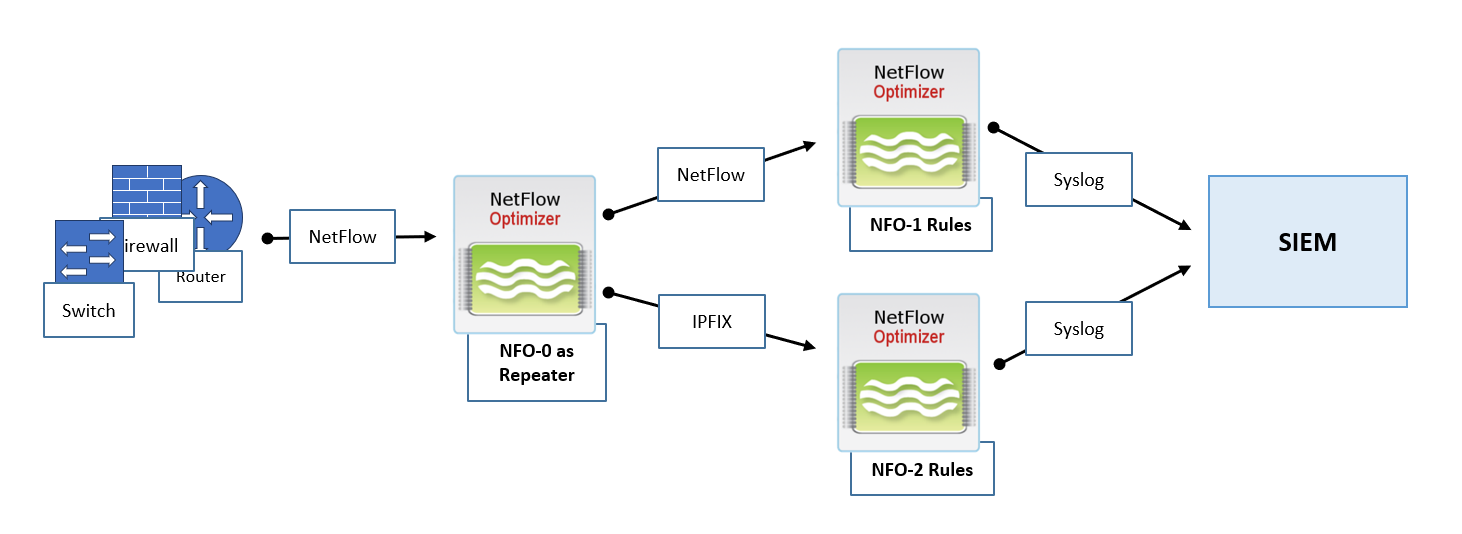

Distributed Deployment on Premises – Various Configurations

This scenario is ideal when you need to apply different sets of processing rules or configurations to flow data originating from different types of network devices.

- Centralized Flow Reception: A central NFO instance, NFO-0, is configured to receive all NetFlow data from your network infrastructure.

- Targeted Flow Forwarding: Using the Repeater functionality, NFO-0 is configured with filtering rules to forward flow data based on the originating devices. For instance, NetFlow from your core switches and routers (potentially reporting in NetFlow v5 format) is directed to NFO-1. Simultaneously, all traffic flows from your edge devices (potentially reporting in IPFIX format) are forwarded to NFO-2.

- Specialized Processing: NFO-1 is configured with NFO Logic Modules and rules specifically designed for processing flows from core switches and routers, such as focusing on top traffic analysis. NFO-2, on the other hand, is configured with modules and rules optimized for analyzing all traffic from edge devices, potentially including more detailed security analysis.

- Centralized or Separate Outputs: Both NFO-1 and NFO-2 can be configured to send their processed data to the same SIEM or to different destination systems based on the specific analytical goals for each type of device.
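Role-based forwarding can be modeled as an ordered list of subnet rules, with the first match winning. The Python sketch below is hypothetical: the subnets stand in for wherever your core and edge devices export from, and the rule format is not NFO's Repeater configuration syntax.

```python
import ipaddress

# Hypothetical address plan: replace with the subnets your devices export from.
ROUTES = [
    (ipaddress.ip_network("10.10.0.0/24"), "NFO-1"),  # core switches and routers
    (ipaddress.ip_network("10.20.0.0/16"), "NFO-2"),  # edge devices
]


def route_for(exporter_ip: str, routes=ROUTES, default=None):
    """Return the downstream instance for an exporter, or `default` if no rule matches."""
    addr = ipaddress.ip_address(exporter_ip)
    for network, target in routes:
        if addr in network:  # first matching rule wins
            return target
    return default
```

Keeping the rules ordered makes overlapping subnets predictable: place the most specific device groups first.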

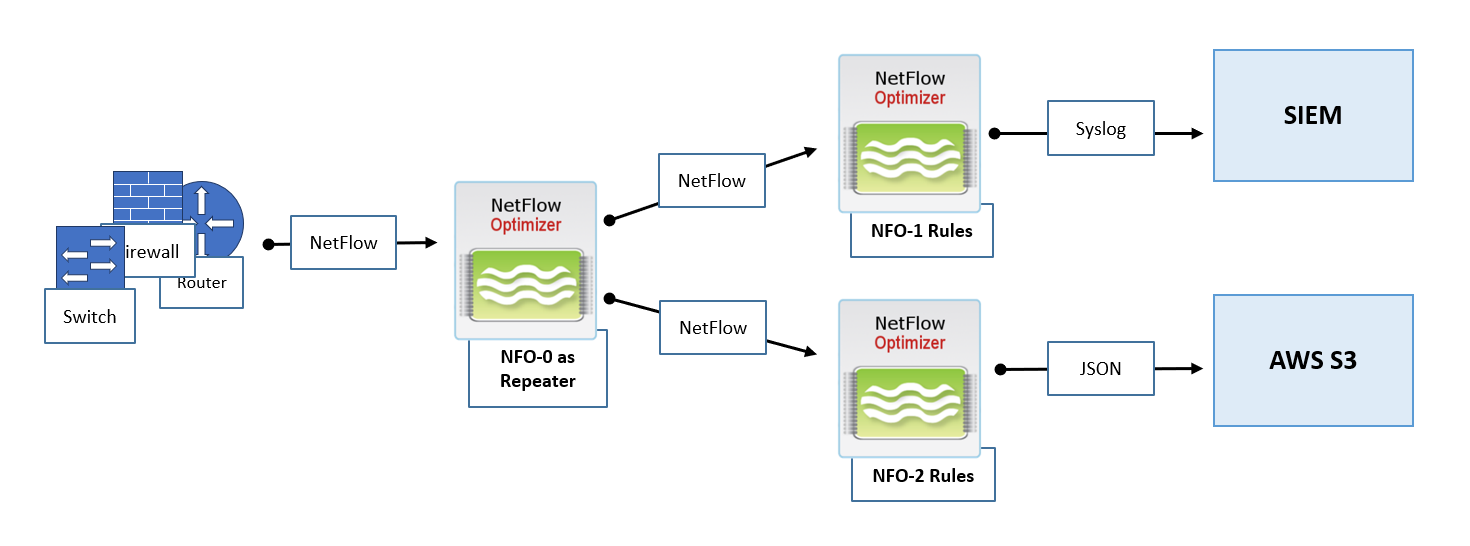

Distributed Deployment on Premises – Various Outputs

This scenario addresses the requirement to process all flow data with different sets of rules and send the resulting information to different types of storage or analysis platforms.

- Centralized Flow Ingestion: A central NFO instance, NFO-0, is configured to receive all NetFlow data from your network.

- Parallel Flow Processing and Replication: NFO-0 uses its Repeater functionality to duplicate the incoming flow data, so that each downstream instance receives a full copy.

- Dedicated Processing and Output Streams:

  - One stream of replicated flow data is forwarded to NFO-1. NFO-1 is configured with a specific set of NFO Logic Modules and rules optimized for generating data suitable for your primary SIEM, focusing on security and critical event detection. The output from NFO-1 is then sent to the SIEM.

  - A second stream of replicated flow data is forwarded to NFO-2. NFO-2 is configured with a different set of NFO Logic Modules and rules, perhaps focusing on long-term traffic trends and overall network behavior. The output from NFO-2 is then sent to a more cost-effective storage solution, such as AWS S3, for archival and less immediate analysis.
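Conceptually, replication means every incoming datagram is sent unchanged to every downstream instance. The following minimal Python sketch of a UDP fan-out illustrates that behavior only; it is not how the NFO Repeater is implemented. Destination addresses are placeholders, and the copies leave from the repeating host's own address, so downstream collectors that identify exporters by source IP would see this host instead of the original device. Consult the Administration Guide for how the NFO Repeater handles this.

```python
import socket
from typing import Optional


def repeat_datagram(sock: socket.socket, packet: bytes,
                    destinations: list[tuple[str, int]]) -> int:
    """Send one identical copy of `packet` to each destination; return the count."""
    for host, port in destinations:
        sock.sendto(packet, (host, port))
    return len(destinations)


def run_repeater(listen_addr: tuple[str, int],
                 destinations: list[tuple[str, int]],
                 max_packets: Optional[int] = None) -> None:
    """Receive flow datagrams on listen_addr and copy each one to all destinations.

    Runs until interrupted unless max_packets is given.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as rx, \
            socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as tx:
        rx.bind(listen_addr)
        handled = 0
        while max_packets is None or handled < max_packets:
            packet, _exporter = rx.recvfrom(65535)  # _exporter: the sending device
            repeat_datagram(tx, packet, destinations)
            handled += 1
```

For example, `run_repeater(("0.0.0.0", 2055), [("10.0.0.11", 2055), ("10.0.0.12", 2055)])` would copy everything received on UDP port 2055 to two downstream hosts; the port and addresses are illustrative.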

These distributed deployment scenarios offer flexibility in how you leverage NetFlow Optimizer to meet your specific network monitoring, security, and analytical requirements. Remember to consult the NFO Administration Guide for detailed configuration instructions on Repeater functionality and managing multiple NFO instances.

Distributed Deployment in a Hybrid Environment

A hybrid environment, combining on-premises infrastructure with cloud resources, presents unique challenges and opportunities for network flow collection and analysis. NetFlow Optimizer (NFO) can be strategically deployed in such environments to provide comprehensive visibility across your entire infrastructure. Consider the following scenarios for collecting flow data from both your physical data center and your cloud resources, such as VPC Flow Logs.

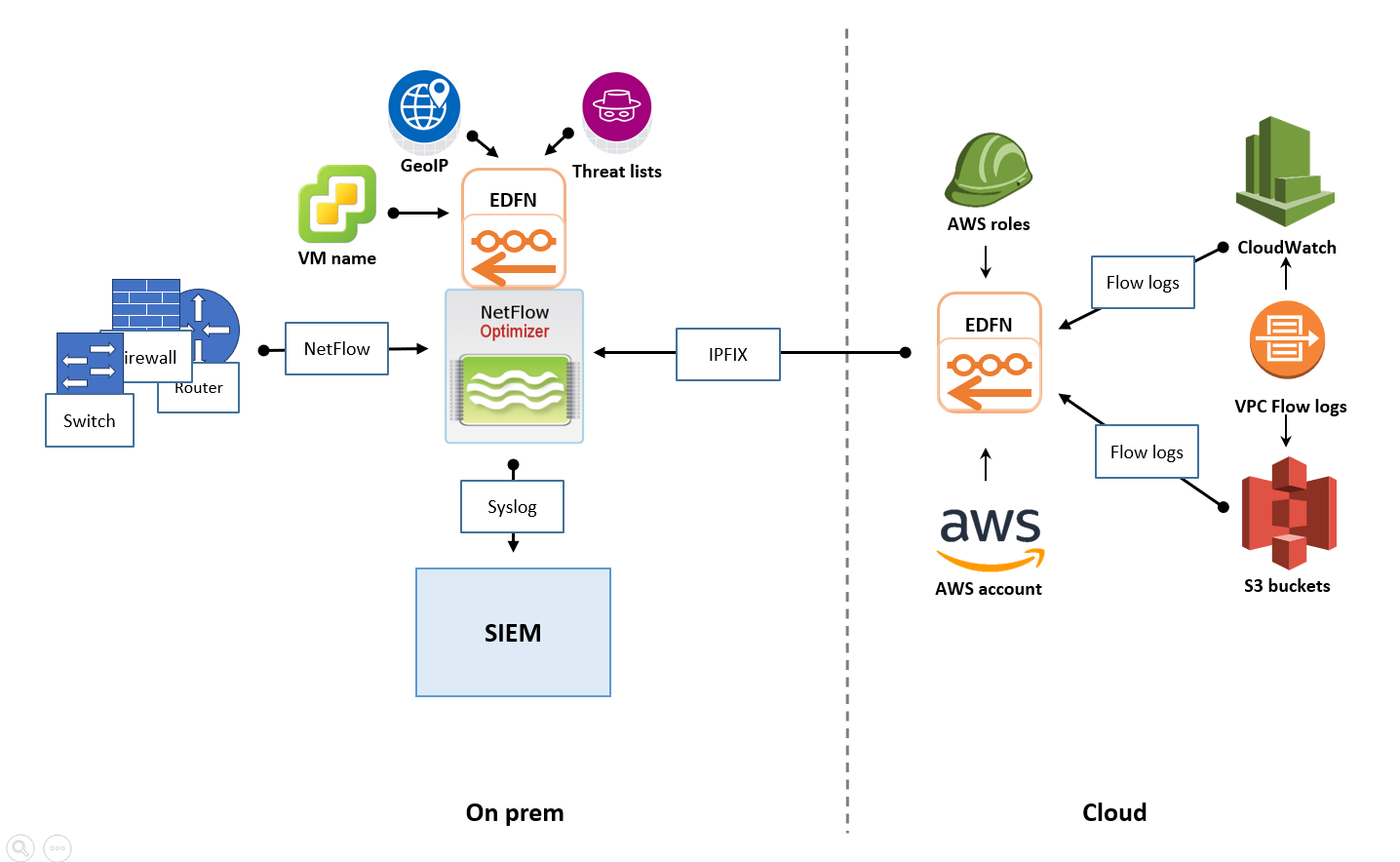

On-Premises SIEM with Cloud Flow Collection

This scenario addresses the case where your primary Security Information and Event Management (SIEM) system is hosted within your on-premises data center, and you need to collect flow data from both your physical network devices and your cloud-based Virtual Private Cloud (VPC) environments.

- On-Premises Flow Collection: Within your data center, you would deploy one or more NFO instances to collect NetFlow, IPFIX, or sFlow data from your physical switches, routers, firewalls, and other network devices, following the principles outlined in the "Distributed Deployment on Premises" section. These NFO instances would process the flow data according to your defined rules and configurations.

- Cloud Flow Collection: To gather flow logs from your cloud environment (e.g., AWS VPC Flow Logs, Azure Network Watcher flow logs, GCP VPC Flow Logs), you would typically deploy a separate EDFN instance within the cloud environment, ideally co-located within the same region as your VPCs. This cloud-based EDFN instance would be configured to ingest the native cloud flow logs, convert them to IPFIX, and send them to an NFO instance installed on premises.

- Centralized Output to On-Premises SIEM: The key to this scenario is ensuring that all flow data, whether collected from your physical network or ingested from the cloud by the EDFN instance, is ultimately processed and sent to your central SIEM located in your data center. This requires establishing secure network connectivity between your cloud environment and your on-premises network so that the cloud-based EDFN instance can forward its IPFIX output to the on-premises NFO instances, which process it and send the results to the SIEM. This might involve VPN tunnels or dedicated network connections.
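EDFN's internal conversion logic is not described here, but the core idea of turning a cloud flow log record into IPFIX can be illustrated by mapping fields to IPFIX information element names. The Python sketch below assumes the AWS VPC Flow Logs default (version 2) record format; it produces a dictionary keyed by IPFIX element names and does not encode binary IPFIX messages, templates, or sets. The `action` field (ACCEPT or REJECT) is not mapped in this sketch.

```python
import ipaddress
from typing import Optional

# Field order of the AWS VPC Flow Logs default (version 2) record format.
DEFAULT_V2_FIELDS = [
    "version", "account-id", "interface-id", "srcaddr", "dstaddr",
    "srcport", "dstport", "protocol", "packets", "bytes",
    "start", "end", "action", "log-status",
]

# Numeric fields and the IPFIX information element names they map to.
NUMERIC_TO_IPFIX = {
    "srcport": "sourceTransportPort",
    "dstport": "destinationTransportPort",
    "protocol": "protocolIdentifier",
    "packets": "packetDeltaCount",
    "bytes": "octetDeltaCount",
    "start": "flowStartSeconds",
    "end": "flowEndSeconds",
}


def vpc_record_to_ipfix_fields(line: str) -> Optional[dict]:
    """Map one default-format VPC Flow Log record to IPFIX element names.

    Returns None for NODATA/SKIPDATA records, which carry no flow fields.
    """
    values = line.split()
    if len(values) != len(DEFAULT_V2_FIELDS):
        raise ValueError(f"expected {len(DEFAULT_V2_FIELDS)} fields, got {len(values)}")
    record = dict(zip(DEFAULT_V2_FIELDS, values))
    if record["log-status"] != "OK":
        return None

    fields = {}
    # Address elements differ for IPv4 and IPv6, so choose by address family.
    for key, v4_name, v6_name in (
        ("srcaddr", "sourceIPv4Address", "sourceIPv6Address"),
        ("dstaddr", "destinationIPv4Address", "destinationIPv6Address"),
    ):
        addr = ipaddress.ip_address(record[key])
        fields[v4_name if addr.version == 4 else v6_name] = str(addr)
    for key, ie_name in NUMERIC_TO_IPFIX.items():
        fields[ie_name] = int(record[key])
    return fields
```

For the sample record `2 123456789010 eni-1235b8ca123456789 172.31.16.139 172.31.16.21 20641 22 6 20 4249 1418530010 1418530070 ACCEPT OK`, the function maps `destinationTransportPort` to 22 and `octetDeltaCount` to 4249.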

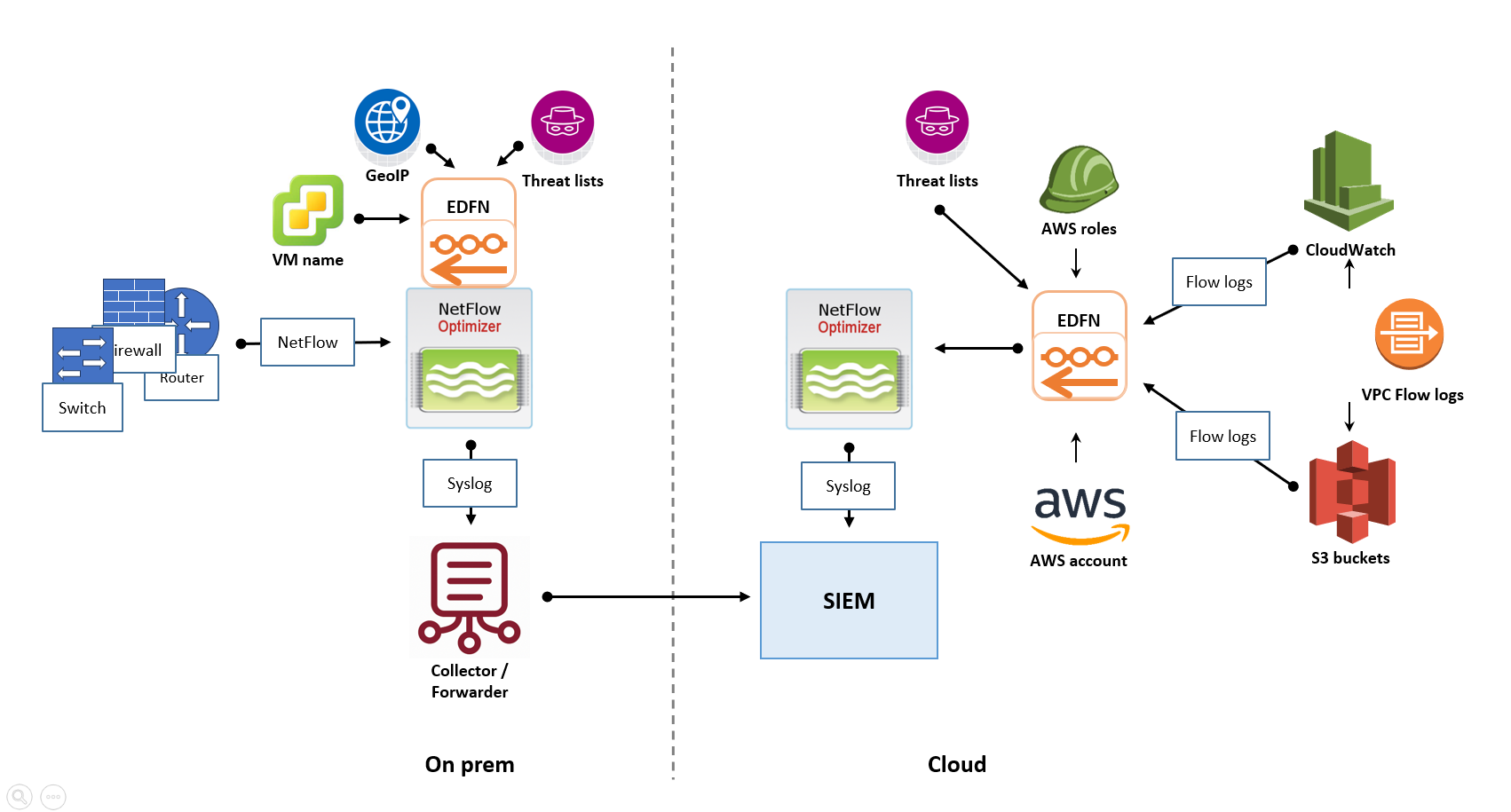

Cloud-Based SIEM with Hybrid Flow Collection

If your organization has adopted a cloud-first strategy and your SIEM solution is hosted in the cloud, the deployment architecture for NFO would shift accordingly. This scenario illustrates a recommended deployment approach in such cases.

- On-Premises Flow Collection and Forwarding: In your on-premises data center, you would still deploy one or more NFO instances to collect flow data from your physical network devices. However, instead of directly sending the processed data to an on-premises SIEM, these NFO instances would be configured to securely forward their output to your cloud-based SIEM. This requires establishing secure network connectivity from your data center to your cloud environment.

- Cloud Flow Collection: As in the previous scenario, flow collection is deployed within your cloud environment, ideally near your VPCs. Here, however, an NFO instance ingests the native cloud flow logs and forwards its processed data directly to the cloud-based SIEM.

- Centralized Output in the Cloud: In this architecture, both the flow data originating from your on-premises network (processed by on-premises NFO) and the flow data from your cloud environment (processed by cloud NFO) converge and are analyzed within your cloud-based SIEM.

Key Considerations for Hybrid Deployments

- Network Connectivity: Establishing reliable and secure network connectivity between your on-premises environment and your cloud provider is crucial for successful hybrid flow collection. Consider bandwidth requirements and latency.

- Security: Ensure that all communication channels between NFO instances and your SIEM, whether on-premises or in the cloud, are secured using appropriate encryption and authentication mechanisms.

- Cost Optimization: When deploying NFO in the cloud, consider the cost implications of instance sizes, data transfer, and storage. Right-sizing your cloud-based NFO instances is important.

- Centralized Management: While you might have NFO instances in different locations, aim for a centralized approach to configuration and management where possible, leveraging the capabilities of the NFO Controller (even if one Controller manages geographically distributed Servers).
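To put numbers on the bandwidth consideration, raw flow export traffic can be estimated from the flow rate and the export format's record sizes. The Python sketch below is a back-of-the-envelope helper, not an NFO tool; it assumes IPv4 and UDP transport (28 bytes of IP and UDP headers per datagram) and full datagrams. IPFIX record sizes depend on the templates in use, and the volume of NFO output sent to a SIEM depends on the configured Logic Modules, so measure those rather than relying on this estimate.

```python
import math

IPV4_UDP_OVERHEAD = 20 + 8  # IPv4 header (no options) + UDP header, in bytes


def export_bandwidth_mbps(flows_per_sec: int, record_bytes: int,
                          records_per_datagram: int, header_bytes: int) -> float:
    """Estimate link bandwidth in Mbps for exporting flows at a given rate."""
    # Each datagram carries up to records_per_datagram records plus headers.
    datagrams_per_sec = math.ceil(flows_per_sec / records_per_datagram)
    bytes_per_sec = (flows_per_sec * record_bytes
                     + datagrams_per_sec * (header_bytes + IPV4_UDP_OVERHEAD))
    return bytes_per_sec * 8 / 1_000_000
```

With NetFlow v5's fixed layout (a 24-byte header and up to 30 records of 48 bytes per datagram), `export_bandwidth_mbps(250_000, 48, 30, 24)` comes to roughly 99.5 Mbps, before any Ethernet framing or VPN tunnel overhead.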

By strategically deploying NFO in a hybrid environment, you can gain comprehensive visibility into network traffic patterns and security events across your entire infrastructure, regardless of where your resources are located. Remember to consult the NFO Administration Guide for detailed configuration instructions on connecting NFO instances and forwarding data to various destinations.

High Availability (HA) Planning (Conceptual)

If high availability is a requirement, see the High Availability Deployment section of the Administration Guide, which details the steps for configuring HA features.