Introduction

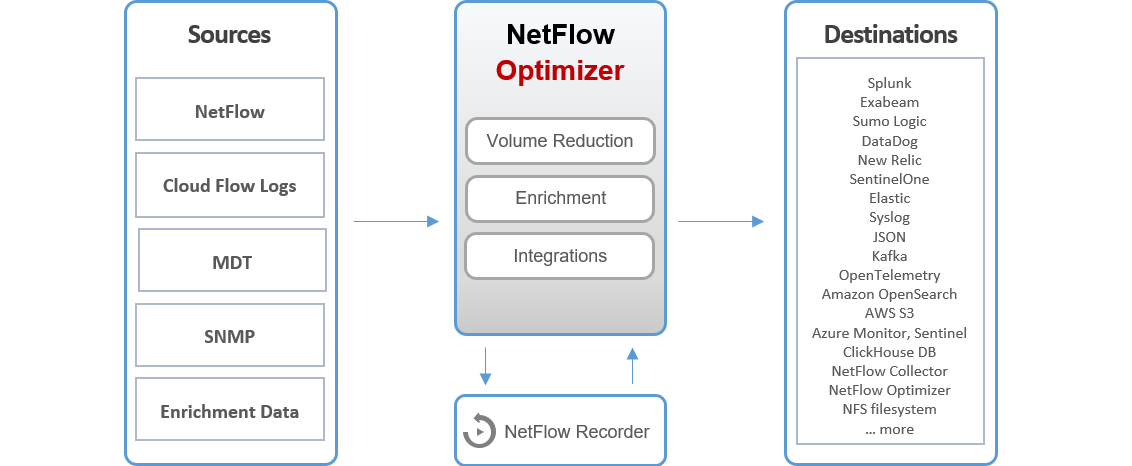

NetFlow Optimizer™ (NFO) is a high-performance, software-only processing engine designed to provide total visibility across hybrid network environments. NFO transforms massive streams of raw network data into high-fidelity, actionable intelligence before it reaches your SIEM or observability platform.

NFO acts as vendor-agnostic middleware. It ingests data from physical, virtual, and cloud environments, optimizes it for cost-effective storage, enriches it with business context, and delivers it to your downstream systems in real time.

The Two Pillars of Data Ingestion

NetFlow Optimizer provides a 360-degree view of your network by unifying two critical data streams:

- Flow Optimization & Enrichment: NFO accepts NetFlow, IPFIX, sFlow, and J-Flow from network hardware, alongside Cloud Flow Logs from AWS, Azure, OCI, and Google VPC. It deduplicates and aggregates these records to ensure your SIEM receives only the most relevant data.

- Infrastructure Metrics: NFO gathers performance metrics from devices through SNMP polling and Model-Driven Telemetry (MDT), and receives SNMP Traps for immediate event notification.

Core Value Propositions

Efficient NetFlow Volume Reduction

Modern networks generate a "NetFlow Tsunami" that can quickly overwhelm SIEM storage and inflate licensing costs. NFO intelligently reduces data volume by 80–90% without sacrificing the visibility required for security and compliance.

- Intelligent Aggregation: By summarizing similar flows and optionally excluding ephemeral client ports, NFO collapses hundreds of micro-flows into a single, high-value record.

- Deduplication: NFO identifies and removes redundant flow records caused by overlapping collection points, ensuring only unique information is stored.

- Flow Stitching: NFO reconstructs unidirectional flows into complete bidirectional conversations, giving a full picture of each interaction while reducing record counts by up to an additional 50%.

- Top N Analysis: NFO automatically prioritizes the most impactful traffic patterns (Top Talkers) so your analysts see critical bandwidth consumers first.
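To make these techniques concrete, the following minimal Python sketch applies deduplication, aggregation with ephemeral-port masking, flow stitching, and a Top N ranking to a handful of toy records. It is an illustration under stated assumptions, not NFO's implementation: the `Flow` record, the deduplication key, the 49152 ephemeral-port threshold (the start of the IANA dynamic port range), and all function names are hypothetical.

```python
import heapq
from collections import defaultdict
from dataclasses import dataclass
from typing import Iterable

# Hypothetical normalized flow record (real NetFlow/IPFIX records carry many more fields).
@dataclass(frozen=True)
class Flow:
    exporter: str  # device that exported the record
    src: str
    dst: str
    sport: int
    dport: int
    proto: int
    packets: int
    octets: int

EPHEMERAL_START = 49152  # assumption: ports >= 49152 (IANA dynamic range) are client-side

def deduplicate(flows: Iterable[Flow]) -> list[Flow]:
    """Keep one copy of a flow reported by several exporters (overlapping collection points).

    Simplification: the key ignores timestamps, which a real deduplicator would compare.
    """
    seen: set[tuple] = set()
    unique: list[Flow] = []
    for f in flows:
        key = (f.src, f.dst, f.sport, f.dport, f.proto, f.packets, f.octets)
        if key not in seen:
            seen.add(key)
            unique.append(f)
    return unique

def aggregate(flows: Iterable[Flow], drop_ephemeral: bool = True) -> dict[tuple, list[int]]:
    """Sum packets/octets per (src, dst, sport, dport, proto), masking ephemeral ports to 0."""
    totals: dict[tuple, list[int]] = defaultdict(lambda: [0, 0, 0])  # packets, octets, flows
    for f in flows:
        sport = 0 if drop_ephemeral and f.sport >= EPHEMERAL_START else f.sport
        dport = 0 if drop_ephemeral and f.dport >= EPHEMERAL_START else f.dport
        t = totals[(f.src, f.dst, sport, dport, f.proto)]
        t[0] += f.packets
        t[1] += f.octets
        t[2] += 1
    return dict(totals)

def stitch(aggregated: dict[tuple, list[int]]) -> dict[tuple, dict[str, int]]:
    """Merge A->B and B->A records into one conversation keyed by ordered endpoints."""
    conversations: dict[tuple, dict[str, int]] = {}
    for (src, dst, sport, dport, proto), (_pkts, octets, _n) in aggregated.items():
        a, b = (src, sport), (dst, dport)
        # Ordering the endpoints makes both directions map to the same key.
        key = (min(a, b), max(a, b), proto)
        conv = conversations.setdefault(key, {"low_to_high_octets": 0, "high_to_low_octets": 0})
        if a <= b:
            conv["low_to_high_octets"] += octets
        else:
            conv["high_to_low_octets"] += octets
    return conversations

def top_talkers(conversations: dict[tuple, dict[str, int]], n: int) -> list:
    """Return the n conversations with the largest total byte count."""
    return heapq.nlargest(n, conversations.items(), key=lambda kv: sum(kv[1].values()))
```

With five toy input records (one duplicated by a second exporter, two sharing a client with different ephemeral ports, and one reply in the reverse direction), the chain `top_talkers(stitch(aggregate(deduplicate(flows))), n)` ends with two conversations. A production engine would also compare timestamps when deduplicating and carry packet counts and timing in both directions when stitching.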

The Importance of NetFlow Enrichment

Raw IP addresses are "naked" data: they tell you "how much," but not "who" or "why." NFO turns disparate connection data into records rich with business context, making your flow data ready for advanced AI/ML analysis.

- User Identity: Link traffic to specific users via integrations with Active Directory, Okta, and Microsoft Entra ID.

- Threat Intelligence: Automatically flag communications with known malicious actors using real-time threat feeds.

- GeoIP & Location: Map traffic to physical locations and countries using industry-standard geolocation databases.

- Cloud & Virtual Context: Correlate flows with VM Names and Cloud Instance metadata (AWS/Azure/GCP) to simplify investigations in hybrid environments.
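Conceptually, enrichment is a set of lookups keyed on each flow endpoint. The sketch below shows that idea with Python's standard `ipaddress` module; it is not NFO's enrichment engine. The lookup tables, the country codes assigned to the RFC 5737 documentation ranges, and the output field names are all hypothetical; real deployments would populate them from identity providers, threat-intelligence feeds, and a GeoIP database.

```python
import ipaddress
from typing import Optional

# Hypothetical lookup data using documentation address ranges (RFC 5737).
USER_BY_IP = {"10.1.2.3": "alice@example.com"}           # e.g. from directory logon events
THREAT_NETS = [ipaddress.ip_network("203.0.113.0/24")]    # e.g. from a threat feed
GEO_NETS = [                                              # e.g. from a GeoIP database
    (ipaddress.ip_network("198.51.100.0/24"), "DE"),
    (ipaddress.ip_network("203.0.113.0/24"), "US"),
]

def geo_lookup(addr) -> Optional[str]:
    """Longest-prefix match of an address against GEO_NETS; None if nothing matches."""
    best = None
    for net, country in GEO_NETS:
        if addr in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, country)
    return best[1] if best else None

def enrich(flow: dict) -> dict:
    """Return a copy of the flow with user, country, and threat fields for both endpoints."""
    out = dict(flow)
    for side in ("src", "dst"):
        addr = ipaddress.ip_address(flow[side])
        out[f"{side}_user"] = USER_BY_IP.get(flow[side])
        out[f"{side}_country"] = geo_lookup(addr)
        out[f"{side}_threat"] = any(addr in net for net in THREAT_NETS)
    return out
```

For example, `enrich({"src": "10.1.2.3", "dst": "203.0.113.50", "octets": 1200})` attributes the source to `alice@example.com` and flags the destination as a threat located in the (hypothetical) `US` range.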

Leveraging Existing Investments

Organizations have already invested significant resources into their SIEM (Security) and IT Ops (Monitoring) platforms. NFO is designed to act as a high-performance "pre-processor" that feeds these systems enriched, high-fidelity data, breaking down silos and maximizing the ROI of your existing infrastructure.

- Native SIEM Integration: Seamlessly stream data into platforms like Splunk, Microsoft Sentinel, and CrowdStrike. Instead of a "rip and replace," NFO extends the life and performance of your current tools.

- Cross-Domain Correlation: Because NFO delivers flow data to the same platforms that hold server logs, application metrics, and security events, you gain a holistic view of IT incidents. A network traffic spike can be linked immediately to unusual login activity or application performance degradation.

- Unified Visibility: Centralize your investigation workflow. Security analysts gain network context for threat detection, while IT Operations teams gain deeper insights into connectivity-related performance issues—all within their familiar interfaces.

- Cost Management: By applying volume reduction before the data reaches the SIEM, you drastically lower ingestion and storage costs, allowing you to monitor more of your network for the same budget.

Multi-Group Infrastructure Insights

Traditional monitoring requires manual configuration for every new device, leading to visibility gaps and "monitoring fatigue." NFO’s unique Multi-Group Inheritance model automates infrastructure classification, ensuring "Zero-Touch" discovery and deep technical insights from day one.

- Hierarchical Inheritance: Devices automatically inherit monitoring profiles based on their position in the infrastructure hierarchy. A switch identified as "Cisco" + "Core" + "Layer 3" instantly receives the correct polling intervals and OID maps for all three categories.

- Zero-Touch Discovery: As new hardware is added to the network, NFO automatically detects the Vendor, Role, and Features. This eliminates the need for manual templates and ensures that no device remains unmonitored.

- Contextual Health Mapping: By classifying devices by their specific capabilities (e.g., Load Balancer vs. Firewall), NFO provides role-specific health metrics. This ensures that a "congested interface" alert is prioritized differently on a backbone trunk than on a standard access port.

- Dynamic Inventory Tracking: Maintain a real-time, self-updating inventory of your entire estate. NFO continuously audits device features and software versions, simplifying compliance reporting and hardware lifecycle management.
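One way to picture Multi-Group Inheritance is as a set of group rules, each contributing polling settings and SNMP objects, merged into a single effective profile for every device that matches. The sketch below is hypothetical: the group predicates, the device "facts" dictionary (including the `role` and `features` keys), the default interval, and the merge policy (shortest polling interval wins, polled objects are unioned) are assumptions for illustration. The grounded details are that `sysObjectID` is a standard SNMPv2-MIB object and that Cisco values fall under the enterprise prefix 1.3.6.1.4.1.9.

```python
from dataclasses import dataclass
from typing import Callable

DEFAULT_POLL_SECONDS = 300  # assumption: interval used when no group matches

@dataclass(frozen=True)
class Group:
    name: str
    matches: Callable[[dict], bool]  # predicate over discovered device facts
    poll_seconds: int
    objects: frozenset[str]          # SNMP objects to poll, as MIB::object names

# Hypothetical group definitions.
GROUPS = [
    Group("Cisco", lambda d: d["sysObjectID"].startswith("1.3.6.1.4.1.9."), 300,
          frozenset({"CISCO-PROCESS-MIB::cpmCPUTotal5minRev"})),
    Group("Core", lambda d: d.get("role") == "core", 60,
          frozenset({"IF-MIB::ifHCInOctets", "IF-MIB::ifHCOutOctets"})),
    Group("Layer 3", lambda d: "routing" in d.get("features", ()), 120,
          frozenset({"IP-FORWARD-MIB::inetCidrRouteNumber"})),
]

def resolve_profile(device: dict) -> dict:
    """Merge every matching group's settings into one effective monitoring profile."""
    matched = [g for g in GROUPS if g.matches(device)]
    return {
        "groups": [g.name for g in matched],
        # Merge policy (assumption): the most frequent polling requirement wins...
        "poll_seconds": min((g.poll_seconds for g in matched), default=DEFAULT_POLL_SECONDS),
        # ...and the polled objects are the union of all matched groups.
        "objects": frozenset().union(*(g.objects for g in matched)),
    }
```

A device reporting a Cisco `sysObjectID`, a `core` role, and a `routing` feature matches all three groups and is polled every 60 seconds for all four objects, mirroring the "Cisco" + "Core" + "Layer 3" example above.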

Why These Benefits Matter

The combination of NFO’s core technologies solves the most critical challenges in modern network observability:

- Operational Efficiency (The "Tsunami" Solution): By applying Intelligent Volume Reduction, you eliminate the "NetFlow Tsunami." This dramatically reduces SIEM ingestion fees and storage overhead, allowing you to retain longer data histories without breaking your budget.

- Accelerated Incident Response (The "Naked IP" Solution): Real-Time Enrichment ensures that incident responders never have to perform manual "IP-to-User" lookups during a crisis. By transforming "naked" IPs into meaningful business context, it cuts the time needed to attribute suspicious traffic to a user or asset from hours to seconds, so threats can be contained sooner.

- Unified Strategic Visibility (The "Tool Silo" Solution): Investment Leveraging allows you to monitor hybrid-cloud, virtual, and physical infrastructure through a single, optimized data stream. NFO breaks down data silos, ensuring your existing SIEM and IT Ops platforms become the "Single Source of Truth" for your entire estate.

- Zero-Touch Scalability (The "Manual Configuration" Solution): Multi-Group Infrastructure Insights remove the administrative burden of scaling. By automating device classification and inheritance, your monitoring environment grows organically with your network, ensuring 100% visibility with zero manual template management.

Summary of Value

| Technical Pillar | Problem Solved | Business Outcome |
|---|---|---|
| Volume Reduction | NetFlow Tsunami | Up to 90% savings on storage and licensing. |
| Enrichment | "Naked" IP Context | Instant attribution of users and threats. |
| Investment Leveraging | Tool Silos | Maximized ROI of existing SIEM/IT Ops tools. |
| Multi-Group Insights | Manual Configuration | Zero-Touch maintenance and 100% visibility. |

How It Works: The NFO Processing Pipeline

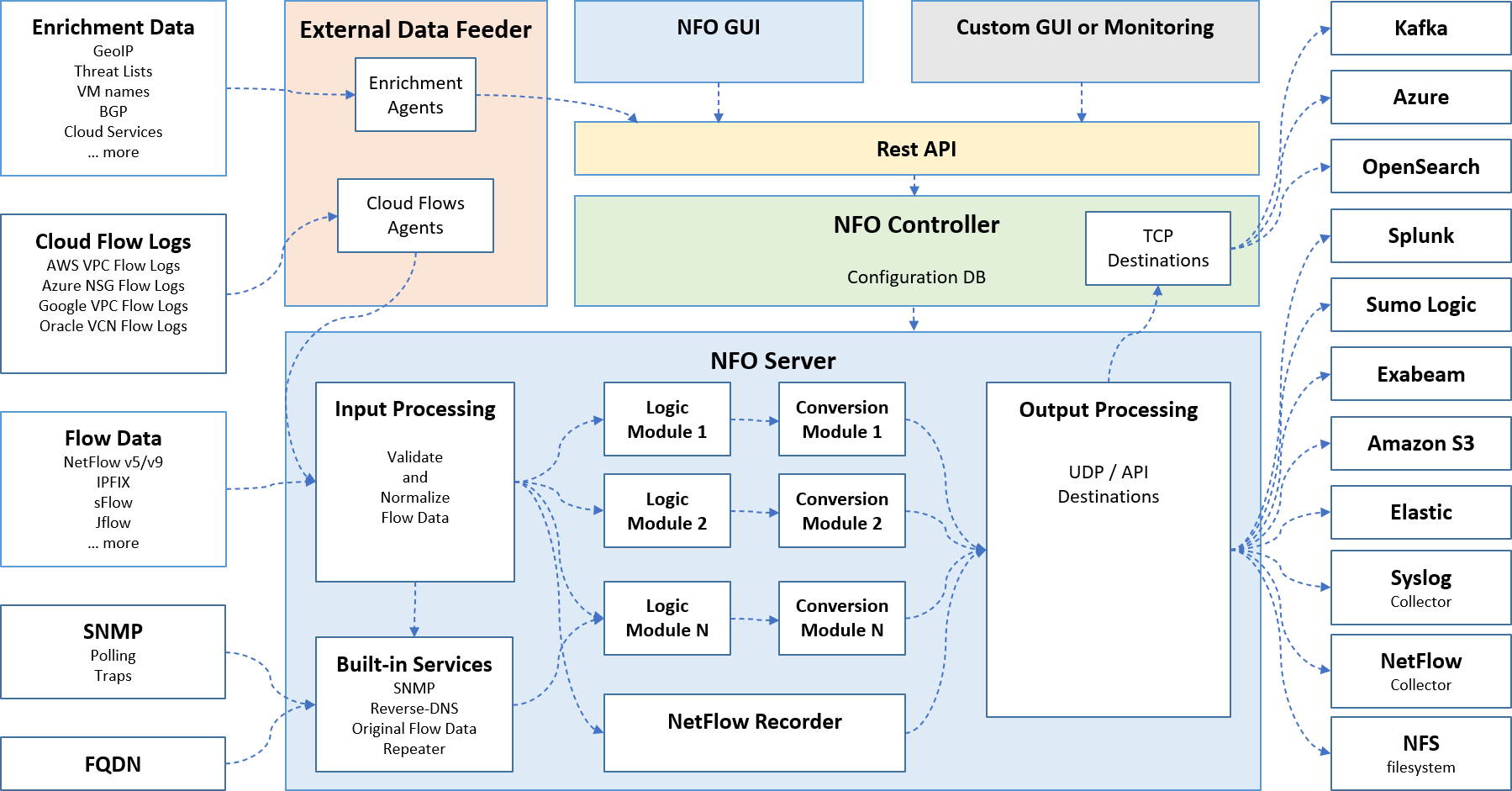

NetFlow Optimizer operates as a real-time stream processing engine. It follows a three-stage pipeline—Ingest, Process, and Export—to ensure that your monitoring tools receive high-context data at a fraction of the original volume.

Stage 1: Multi-Source Ingestion

NFO acts as a universal receiver for disparate network data formats, normalizing them into a single internal format for processing:

- Flow Data: Ingests NetFlow (v5/v9), IPFIX, sFlow, and J-Flow.

- Cloud Telemetry: Collects native flow logs from AWS, Azure, Google Cloud, and Oracle via the External Data Feeder.

- Infrastructure Metrics: Actively polls hardware via SNMP and receives passive SNMP Traps.

- External Context: Ingests FQDN and Enrichment Data (GeoIP, Threat Lists, VM names) to provide deeper visibility.
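Normalization means turning each wire format into one common record shape. As an illustration, the sketch below decodes a NetFlow v5 export packet (a fixed 24-byte header followed by 48-byte flow records) into plain dictionaries using Python's `struct` module. The output field names are hypothetical and do not describe NFO's internal format; NetFlow v9 and IPFIX are template-based and need considerably more logic.

```python
import ipaddress
import struct

# NetFlow v5 wire layout: a 24-byte header followed by `count` 48-byte flow records.
V5_HEADER = struct.Struct("!HHIIIIBBH")
V5_RECORD = struct.Struct("!IIIHHIIIIHHBBBBHHBBH")

def parse_netflow_v5(packet: bytes, exporter: str) -> list[dict]:
    """Decode one NetFlow v5 export packet into normalized (hypothetical) flow dicts."""
    if len(packet) < V5_HEADER.size:
        raise ValueError("packet shorter than a NetFlow v5 header")
    version, count, _uptime, unix_secs, *_ = V5_HEADER.unpack_from(packet, 0)
    if version != 5:
        raise ValueError(f"unexpected export version {version}")
    if len(packet) < V5_HEADER.size + count * V5_RECORD.size:
        raise ValueError("truncated NetFlow v5 packet")

    flows = []
    for i in range(count):
        (src, dst, _nexthop, in_if, out_if, packets, octets, _first, _last,
         sport, dport, _pad1, tcp_flags, proto, _tos, _src_as, _dst_as,
         _src_mask, _dst_mask, _pad2) = V5_RECORD.unpack_from(
            packet, V5_HEADER.size + i * V5_RECORD.size)
        flows.append({
            "format": "netflow_v5",
            "exporter": exporter,
            "export_time": unix_secs,
            "src": str(ipaddress.IPv4Address(src)),
            "dst": str(ipaddress.IPv4Address(dst)),
            "sport": sport,
            "dport": dport,
            "proto": proto,
            "tcp_flags": tcp_flags,
            "in_if": in_if,
            "out_if": out_if,
            "packets": packets,
            "octets": octets,
        })
    return flows
```

Validating the header before slicing records means a truncated or non-v5 datagram is rejected with a `ValueError` instead of producing partial records.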

Stage 2: The Optimization & Enrichment Engine

At the heart of the NFO Server, raw data is validated and normalized before passing through specialized modules:

- Input Processing: Validates and normalizes all incoming flow data to ensure consistency.

- Built-in Services: Performs critical background tasks such as SNMP correlation, reverse-DNS lookups, and repeating (forwarding) the original flow data to other destinations.

- Logic & Conversion Modules: These modular components apply specific processing logic and convert data into optimized formats for downstream consumption.

- NetFlow Recorder: Logs and stores flow data for historical reference.
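The modular design of Stage 2 can be pictured as a chain of functions, each of which may transform a record or drop it. The sketch below assumes that structure; the module names, record fields, and the injected resolver are hypothetical and are not NFO's module interface. Reverse-DNS results are cached with `functools.lru_cache` so that a repeated address triggers only one lookup.

```python
import socket
from functools import lru_cache
from typing import Callable, Iterable, Iterator, Optional

Record = dict
Module = Callable[[Record], Optional[Record]]  # a module returns None to drop a record

def validate(rec: Record) -> Optional[Record]:
    """Drop records that lack required fields or carry negative counters."""
    required = ("src", "dst", "packets", "octets")
    if any(k not in rec for k in required) or rec["packets"] < 0 or rec["octets"] < 0:
        return None
    return rec

def system_resolver(ip: str) -> Optional[str]:
    """Reverse-DNS lookup through the operating system resolver; None on failure."""
    try:
        return socket.gethostbyaddr(ip)[0]
    except OSError:
        return None

def make_rdns_module(resolve: Callable[[str], Optional[str]], cache_size: int = 10_000) -> Module:
    """Build a module that adds host names, caching lookups per address."""
    cached = lru_cache(maxsize=cache_size)(resolve)

    def rdns(rec: Record) -> Record:
        return {**rec, "src_host": cached(rec["src"]), "dst_host": cached(rec["dst"])}

    return rdns

def run_pipeline(records: Iterable[Record], modules: list[Module]) -> Iterator[Record]:
    """Pass each record through the modules in order; stop early if a module drops it."""
    for rec in records:
        out: Optional[Record] = rec
        for module in modules:
            out = module(out)
            if out is None:
                break
        if out is not None:
            yield out
```

A typical chain would be `run_pipeline(records, [validate, make_rdns_module(system_resolver)])`. Note that `lru_cache` never expires entries; a production resolver would also honor DNS TTLs.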

Stage 3: High-Fidelity Export

The Output Processing layer manages the delivery of enriched data to your specific destinations via UDP, TCP, or API:

- Managed Destinations: Direct integration with Splunk, Kafka, Azure, OpenSearch, and Sumo Logic.

- Storage & Analytics: Supports exports to Amazon S3, Elastic, Exabeam, and local NFS filesystems.

- Legacy Support: Can forward optimized data to traditional Syslog or NetFlow collectors.
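As an illustration of the legacy Syslog path, the sketch below formats a record as an RFC 5424 syslog message and sends it over UDP. The facility and severity values (local0, informational) follow RFC 5424; the hostname, app name, and key=value message body are assumptions for illustration and do not reflect NFO's actual output format.

```python
import socket
from datetime import datetime, timezone

FACILITY_LOCAL0 = 16  # syslog facility code per RFC 5424
SEVERITY_INFO = 6     # "Informational" severity per RFC 5424

def to_syslog(record: dict, hostname: str = "nfo-host", app: str = "nfo-sketch") -> bytes:
    """Format a flow record as an RFC 5424 syslog message with a key=value body."""
    pri = FACILITY_LOCAL0 * 8 + SEVERITY_INFO  # 134
    ts = datetime.now(timezone.utc).isoformat(timespec="milliseconds").replace("+00:00", "Z")
    body = " ".join(f"{key}={value}" for key, value in record.items())
    # Fields: <PRI>VERSION TIMESTAMP HOSTNAME APP-NAME PROCID MSGID STRUCTURED-DATA MSG
    return f"<{pri}>1 {ts} {hostname} {app} - - - {body}".encode("utf-8")

def send_udp(payload: bytes, host: str, port: int) -> None:
    """Send one datagram; UDP delivery is best-effort with no acknowledgment."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(payload, (host, port))
```

UDP keeps the sender simple, but datagrams can be lost under load; destinations that need delivery guarantees are better served over TCP or an API.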

Component Overview

| Component | Role |
|---|---|
| NFO Server | The core processing engine where validation, logic modules, and enrichment occur. |
| NFO Controller | Manages the configuration database and orchestrates the server's behavior. |
| External Data Feeder | Hosts agents for Enrichment Data and Cloud Flows to feed the main NFO Server. |
| NFO GUI / REST API | The primary interfaces for administrators and external systems to configure and monitor the platform. |