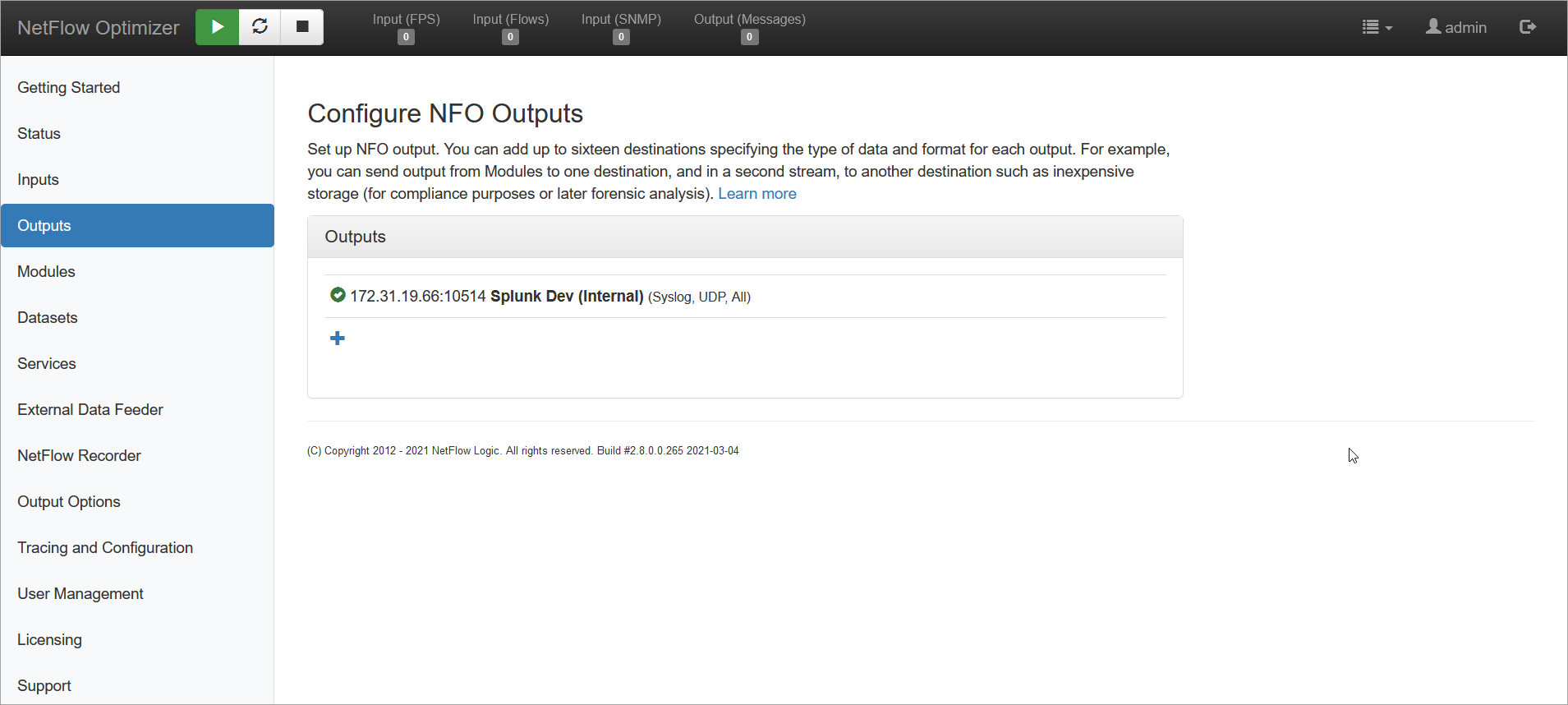

Configure Outputs

NetFlow Optimizer (NFO) processes, enriches, and optimizes your network flow and telemetry data, preparing it for integration with a variety of downstream systems. NFO acts as a data transformation and distribution hub, delivering your network intelligence to the platforms where it is most useful for analysis, security operations, and reporting.

NFO can send processed data to a wide range of destinations. These include Security Information and Event Management (SIEM) systems such as Splunk and Exabeam, data lakes for long-term storage and big data analytics, AWS S3 buckets, and observability pipelines such as Axoflow and Cribl.

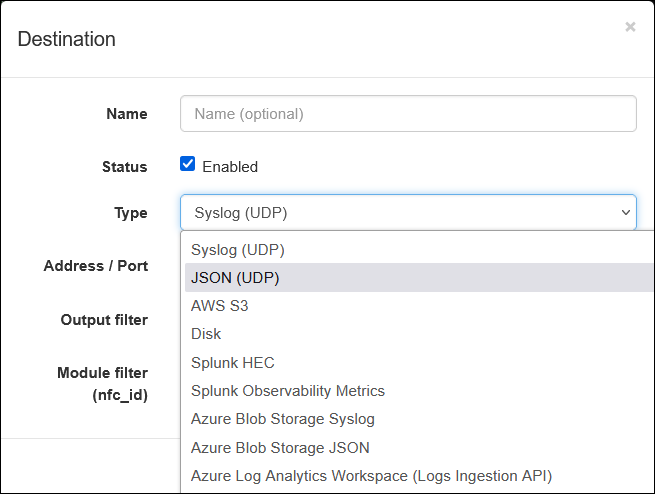

Click the add symbol to create a data output, then select the desired Output Type from the drop-down list.

You can add up to sixteen output destinations and specify the format and type of data sent to each one. To configure a specific output type, refer to the corresponding section of this guide:

NFO Output Types

| Output Type | Description |
|---|---|
| Syslog (UDP) | Sends enriched data in standard Syslog format to a remote destination via UDP. |
| JSON (UDP) | Sends enriched data in structured JSON format via UDP. |
| HTTP Event Collector (HEC) | Sends data via the HEC API. Fully compatible with Splunk HEC and CrowdStrike Falcon LogScale ingest tokens. |
| Azure Log Analytics Workspace | Ingests data directly into Microsoft Azure Log Analytics (supporting Azure Monitor and Microsoft Sentinel). |
| OpenTelemetry | Sends enriched telemetry to destinations that support the OpenTelemetry (OTel) protocol. Recommended for Splunk Observability Cloud. |
| OpenSearch | Sends data to OpenSearch clusters, including Amazon OpenSearch Service. |
| Kafka (JSON or Syslog) | Streams data to Kafka topics in either structured JSON or Syslog format for enterprise data pipelines. |
| AWS S3 | Archives data to Amazon S3 buckets for long-term storage and compliance. |
| Azure Blob Storage (JSON or Syslog) | Archives data to Microsoft Azure Blob Storage in either JSON or Syslog format. |
| ClickHouse | Ingests data directly into ClickHouse high-performance analytical databases. |
| Repeater (UDP) | Retransmits raw flow data (NetFlow/IPFIX) to legacy collectors or secondary NFO instances. |
| Disk | Saves output data directly to the local file system for testing or troubleshooting. |
| Splunk Observability Metrics | (Deprecated) Legacy output for SignalFx. New deployments should use OpenTelemetry. |
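To check that a Syslog (UDP) or JSON (UDP) output is actually reaching its destination, you can temporarily point the output at a throwaway listener. The sketch below uses only the Python standard library. The port 5514 and the helper names `decode_datagram` and `listen` are hypothetical choices for illustration, and the sketch makes no assumption about the field names in NFO's JSON records: it simply parses each datagram as JSON and falls back to plain text (as for a Syslog line).

```python
import json
import socket


def decode_datagram(payload: bytes):
    """Parse a JSON (UDP) datagram; fall back to raw text for Syslog (UDP)."""
    text = payload.decode("utf-8", errors="replace").strip()
    try:
        return json.loads(text)
    except json.JSONDecodeError:
        return text  # not JSON, so treat it as a Syslog line


def listen(host: str = "0.0.0.0", port: int = 5514, count: int = 10) -> None:
    """Print the first `count` datagrams received on host:port, then exit."""
    # Hypothetical default port; use whatever port the NFO output targets.
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.bind((host, port))
        for _ in range(count):
            payload, sender = sock.recvfrom(65535)
            print(sender[0], decode_datagram(payload))
```

Call `listen(port=...)` on the test host with the port configured in the NFO output; each received record is printed with the sender's address. For a persistent setup, use the real collector rather than this script.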

Output Filters

You can set a filter for each output. If you have only one output, the output filter is not applicable and reverts to All.

The following filters are available:

| Output Filter | Description |
|---|---|
| All | The destination receives all data generated by NFO, both from Modules and from the one-to-one conversion of original NetFlow/IPFIX/sFlow records. |
| Modules Output Only | The destination receives only data generated by enabled NFO Modules. |
| Original NetFlow/IPFIX only | The destination receives all flow data, translated one-to-one into syslog or JSON. NetFlow/IPFIX Options records from the original flow data are also translated one-to-one and sent to this output. Use this option to archive all underlying flow records that NFO processes for forensics. Because the volume for this destination can be quite high, it is typically Hadoop or another inexpensive storage system. |
| Original sFlow only | The destination receives sFlow data, translated one-to-one into syslog or JSON. Use this option to archive all underlying sFlow records that NFO processes for forensics. Because the volume for this destination can be quite high, it is typically configured to send output to inexpensive syslog storage. |
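The filter table can be read as a per-record routing decision. The sketch below models that decision conceptually; it is not NFO's implementation. The origin labels `module`, `netflow_ipfix`, and `sflow` and the function names are hypothetical. The single-output rule follows the note that the filter reverts to All when only one output exists.

```python
from enum import Enum


class OutputFilter(Enum):
    ALL = "All"
    MODULES_ONLY = "Modules Output Only"
    NETFLOW_IPFIX_ONLY = "Original NetFlow/IPFIX only"
    SFLOW_ONLY = "Original sFlow only"


# Hypothetical labels for where a record came from (not NFO field names).
MODULE = "module"                # produced by an enabled NFO Module
NETFLOW_IPFIX = "netflow_ipfix"  # one-to-one NetFlow/IPFIX translation, incl. Options
SFLOW = "sflow"                  # one-to-one sFlow translation


def effective_filter(selected: OutputFilter, num_outputs: int) -> OutputFilter:
    # With a single output the filter does not apply and reverts to All.
    return OutputFilter.ALL if num_outputs == 1 else selected


def accepts(selected: OutputFilter, origin: str, num_outputs: int) -> bool:
    """Return True if an output with this filter would receive the record."""
    f = effective_filter(selected, num_outputs)
    if f is OutputFilter.ALL:
        return True
    if f is OutputFilter.MODULES_ONLY:
        return origin == MODULE
    if f is OutputFilter.NETFLOW_IPFIX_ONLY:
        return origin == NETFLOW_IPFIX
    return origin == SFLOW  # OutputFilter.SFLOW_ONLY
```

For example, with three outputs, `accepts(OutputFilter.MODULES_ONLY, SFLOW, 3)` is `False`, but with a single output the same call returns `True` because the filter reverts to All.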

Module Filter

Enter a comma-separated list of nfc_id values for the Modules to include in this output destination.
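A minimal sketch of how such a comma-separated nfc_id list might be parsed and matched, assuming that spaces around commas and empty entries are ignored and that IDs are compared as exact strings. The function names are hypothetical, and the sketch does not model how NFO treats an empty Module Filter field.

```python
def parse_module_filter(text: str) -> set[str]:
    """Split a comma-separated nfc_id list, dropping blanks and surrounding spaces."""
    return {part.strip() for part in text.split(",") if part.strip()}


def module_included(nfc_id: str, module_filter: str) -> bool:
    """Return True if the Module with this nfc_id is listed in the filter."""
    return nfc_id.strip() in parse_module_filter(module_filter)
```

For example, with the placeholder IDs `"20062, 20067"`, `module_included("20067", "20062, 20067")` returns `True`, while an ID not in the list returns `False`.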